There is a lot to be said about the Dell EqualLogic Multipathing Extension Module (MEM) for vSphere. One is that it is an impressive component that will, without a doubt, improve the performance of your vSphere environment. The other is that it is often not installed by organizations large and small. This probably stems from a few reasons.

- The typical user is uncertain of the value it brings.

- vSphere Administrators might be under the assumption that VMware’s Round Robin does the same thing.

- The assumption that if they don’t have vSphere Enterprise Plus licensing, they can’t use MEM.

- It’s command line only.

What a shame, because the MEM gives you optimal performance of vSphere against your EqualLogic array, and is frankly easier to configure. Let me clarify: easier to configure correctly. iSCSI will seemingly work okay without much effort, but that lack of effort up front can catch up with you later, resulting in no multipathing, poor performance, and a configuration prone to error.

There are a number of good articles that outline the advantages of using the MEM. There is no need for me to repeat them, so I’ll just stand on the shoulders of their posts and provide the links at the end of my rambling.

The tool can be configured in a number of different ways to accommodate all types of scenarios, all of which are well documented in the Deployment Guide. The flexibility in deployment options might be why it seems a little intimidating to some users.

I’m going to show you how to set up the MEM in a very simple, typical fashion. Let’s get started. We’ll be working under the following assumptions:

- vSphere 5 and the EqualLogic MEM *

- An ESXi host Management IP of 192.168.199.11

- Host account of root with a password of mypassword

- A standard vSwitch for iSCSI traffic, with two physical uplinks (vmnic4 & vmnic5). A standard vSwitch is used here, but it could just as easily be a Distributed vSwitch.

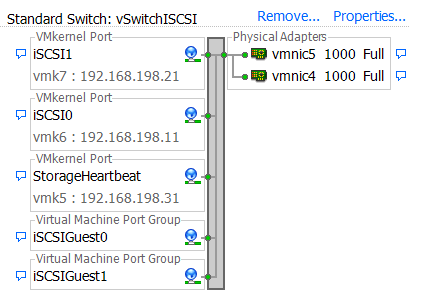

- Three IP addresses for each host: two for the iSCSI vmkernel ports (192.168.198.11 & 192.168.198.21), and one for the Storage Heartbeat (192.168.198.31).

- Jumbo frames (9000 bytes) have been configured on your SAN switchgear, and will be used on your ESXi hosts.

- A desire to accommodate VMs that use guest-attached volumes.

- An EqualLogic Group IP address of 192.168.198.65

- A storage network range of 192.168.198.0/24

When applying this to your own environment, simply tailor the settings to match.
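Before running anything, it can be worth sanity-checking the addressing plan. The following Python sketch is my own illustration, not part of the MEM. It uses the standard `ipaddress` module to confirm that the vmkernel, heartbeat, and Group IP addresses from the list above sit inside the storage subnet and don't collide. The values are this post's example assumptions, and the function name `check_ip_plan` is hypothetical.

```python
import ipaddress

# Example values from the assumptions above; substitute your own.
STORAGE_NET = ipaddress.ip_network("192.168.198.0/24")
VMK_IPS = ["192.168.198.11", "192.168.198.21"]  # iSCSI vmkernel ports
HEARTBEAT_IP = "192.168.198.31"  # set to None on vSphere 5.1 with the latest MEM
GROUP_IP = "192.168.198.65"      # EqualLogic Group IP


def check_ip_plan(net, vmk_ips, heartbeat_ip, group_ip):
    """Hypothetical helper: return a list of problems found (empty if none)."""
    problems = []
    host_addrs = [ipaddress.ip_address(a) for a in vmk_ips]
    if heartbeat_ip:
        host_addrs.append(ipaddress.ip_address(heartbeat_ip))
    group = ipaddress.ip_address(group_ip)

    # Every address must be a usable host address inside the storage subnet.
    for addr in host_addrs + [group]:
        if addr not in net:
            problems.append(f"{addr} is outside {net}")
        elif addr in (net.network_address, net.broadcast_address):
            problems.append(f"{addr} is the network or broadcast address")

    # Host addresses must be unique and must not reuse the Group IP.
    if len(set(host_addrs)) != len(host_addrs):
        problems.append("host addresses are not unique")
    if group in host_addrs:
        problems.append("a host address collides with the Group IP")
    return problems


if __name__ == "__main__":
    issues = check_ip_plan(STORAGE_NET, VMK_IPS, HEARTBEAT_IP, GROUP_IP)
    print("Plan looks OK" if not issues else "\n".join(issues))
    print("Netmask:", STORAGE_NET.netmask)  # 255.255.255.0
```

The netmask derived from the /24 network is the same 255.255.255.0 value used in the setup.pl string later in this post.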

Download and preparation

1. Download the MEM from the Dell EqualLogic customer web portal to a workstation that has the vSphere CLI installed.

2. Extract the MEM so that it resides in a C:\MEM directory. You should see a setup.pl file in the root of C:\MEM, along with a dell-eql-mem-esx5-[version].zip file. Keep this zip file, as it will be needed during the installation process.

Update ESXi host

1. Put the ESXi host into Maintenance Mode.

2. Delete any existing vSwitch that uses the pNICs intended for iSCSI. You will also need to remove any existing iSCSI port bindings.

3. Run the script against the first host:

setup.pl --configure --server=192.168.199.11 --username=root --password=mypassword

This walks you through a series of values you need to enter. It’s all pretty straightforward, but I’ve found it more practical to include everything in one string. This minimizes mistakes, improves documentation, and lets you simply cut and paste into the vSphere CLI. The complete string looks like this:

setup.pl --configure --server=192.168.199.11 --vswitch=vSwitchISCSI --mtu=9000 --nics=vmnic4,vmnic5 --ips=192.168.198.11,192.168.198.21 --heartbeat=192.168.198.31 --netmask=255.255.255.0 --vmkernel=iSCSI --nohwiscsi --groupip=192.168.198.65
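If you build these strings for several hosts, a small script can keep them consistent. This Python sketch is a hypothetical helper, not part of the MEM package. It only assembles the same option string shown above from a dictionary, always emitting plain ASCII `--` so nothing gets mangled into typographic dashes. It does not validate option names against setup.pl, so check them against the Deployment Guide for your MEM version.

```python
# Hypothetical helper (not part of the MEM): build the setup.pl --configure
# string from one dictionary. Values mirror the example in this post.
SETTINGS = {
    "server": "192.168.199.11",
    "vswitch": "vSwitchISCSI",
    "mtu": 9000,
    "nics": ["vmnic4", "vmnic5"],
    "ips": ["192.168.198.11", "192.168.198.21"],
    "heartbeat": "192.168.198.31",  # remove for vSphere 5.1 + latest MEM
    "netmask": "255.255.255.0",
    "vmkernel": "iSCSI",
    "nohwiscsi": None,  # None marks an option that takes no value
    "groupip": "192.168.198.65",
}


def build_configure_command(settings):
    parts = ["setup.pl", "--configure"]  # plain ASCII double hyphens
    for key, value in settings.items():  # dicts keep insertion order
        if value is None:
            parts.append(f"--{key}")
        else:
            if isinstance(value, (list, tuple)):
                value = ",".join(value)  # lists become comma-separated
            parts.append(f"--{key}={value}")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_configure_command(SETTINGS))
```

For the next host, something like `build_configure_command(dict(SETTINGS, server="...", ips=[...]))` changes only the per-host values; drop the `heartbeat` entry if you are on vSphere 5.1 with the latest MEM.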

The script will prompt for a user name and password before it runs through the installation. Near the end of the installation, it will return:

No Dell EqualLogic Multipathing Extension Module found. Continue your setup by installing the module with the ‘esxcli software vib install’ command or through vCenter Update Manager

This is because the MEM VIB has not been installed yet. The iSCSI configuration will work, but only with the default path selection policies. The MEM VIB can be installed by typing the following:

setup.pl --install --server=192.168.199.11 --username=root --password=mypassword

If you look in vCenter, you’ll now see the vSwitch and vmkernel ports created and configured properly, with the port bindings configured correctly as well. You can verify this with the following:

setup.pl --query --server=192.168.199.11 --username=root --password=mypassword

But you aren’t quite done yet. If you are using guest-attached volumes, you will need to create Port Groups on that same vSwitch so that the guests can connect to the array. For the two vNICs inside the guest OS to multipath to the volume properly, you will need to create two Port Groups. When complete, your vSwitch may look something like this:

If you look at the VMkernel ports created by MEM, you will see that the NIC Teaming Switch Failover Order has been set so that one vmnic is “Active” while the other is “Unused.” The other VMkernel port has the same settings, but with the vmnics reversed in their “Active” and “Unused” states.

The Port Groups you create for VMs using guest-attached volumes take a similar approach. Each Port Group has one “Active” and one “Standby” adapter (“Standby,” not “Unused” as on the VMkernel ports), and the two Port Groups have the vmnics reversed. When configuring a VM’s NICs for guest-attached volume access, assign one of its vNICs to one Port Group and the other vNIC to the other Port Group. Confused? Great. Take a look at Will Urban’s post on how to configure Port Groups for guest-attached volumes correctly.
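To make the teaming rules above a bit more concrete, here is a small Python model of the intended layout with a checker. The port and Port Group names are hypothetical, so use whatever names actually exist on your vSwitch. Only vmnic4 and vmnic5 come from this post. The checker encodes the pattern described above: exactly one Active uplink per port, the other uplink Unused on VMkernel ports and Standby on guest Port Groups, and each uplink Active on exactly one port.

```python
# Port and Port Group names are hypothetical; the uplinks match this post.
UPLINKS = ("vmnic4", "vmnic5")

VMKERNEL_PORTS = {
    "iSCSI0": {"vmnic4": "Active", "vmnic5": "Unused"},
    "iSCSI1": {"vmnic4": "Unused", "vmnic5": "Active"},
}

GUEST_PORT_GROUPS = {
    "VM-iSCSI-A": {"vmnic4": "Active", "vmnic5": "Standby"},
    "VM-iSCSI-B": {"vmnic4": "Standby", "vmnic5": "Active"},
}


def layout_ok(ports, other_state):
    """True if each port has exactly one Active uplink, every other uplink is
    set to other_state, and each known uplink is Active on exactly one port."""
    active_count = {uplink: 0 for uplink in UPLINKS}
    for order in ports.values():
        active = [u for u, state in order.items() if state == "Active"]
        if len(active) != 1 or active[0] not in active_count:
            return False
        if any(state != other_state for u, state in order.items() if u != active[0]):
            return False
        active_count[active[0]] += 1
    return all(n == 1 for n in active_count.values())


if __name__ == "__main__":
    print(layout_ok(VMKERNEL_PORTS, "Unused"))      # True
    print(layout_ok(GUEST_PORT_GROUPS, "Standby"))  # True
```

My understanding of the rationale is that iSCSI port binding expects each bound vmkernel port to have a single active uplink. The guest Port Groups can keep a Standby uplink because multipathing for those volumes happens inside the guest.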

Adjusting your existing environment

If you need to rework your existing setup, simply put each host into Maintenance Mode, one at a time, and perform the steps above with your own settings.

Next, take a look at your existing Datastores, and if they are using one of the built-in Path Selection Policy methods (“Fixed,” “Round Robin,” etc.), change them over to “DELL_PSP_EQL_ROUTED.”

If you have VMs that leverage guest-attached volumes off of a single teamed Port Group, you may wish to temporarily recreate this Port Group under the exact same name so the existing VMs don’t get confused. Remove this temporary Port Group once you’ve had the opportunity to change the VMs’ properties.

So there you have it: a simple example of how to install and deploy Dell’s MEM for vSphere 5. Don’t leave performance and ease of management on the shelf. Get MEM installed and running in your vSphere environment.
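If you have many datastores, the Path Selection Policy change can also be made from the vSphere CLI with `esxcli storage nmp device set`. This Python sketch only prints those commands for a list of device IDs. The `naa.` IDs shown are placeholders, so substitute the ones reported by `esxcli storage nmp device list` for your EqualLogic volumes. Per the licensing discussion in the comments, DELL_PSP_EQL_ROUTED requires Enterprise or Enterprise Plus. On other editions you would pass VMW_PSP_RR (Round Robin) instead.

```python
# Hypothetical helper: print vSphere CLI commands that set the Path Selection
# Policy for each listed device. The naa IDs below are placeholders.
HOST = "192.168.199.11"


def psp_commands(host, devices, psp="DELL_PSP_EQL_ROUTED"):
    """One esxcli command per device; pass psp="VMW_PSP_RR" for Round Robin."""
    return [
        f"esxcli --server {host} --username root "
        f"storage nmp device set --device {device} --psp {psp}"
        for device in devices
    ]


if __name__ == "__main__":
    for cmd in psp_commands(HOST, ["naa.EXAMPLE0001", "naa.EXAMPLE0002"]):
        print(cmd)
```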

UPDATE (9/25/2012)

The instructions provided were written under the assumption that vSphere 5 was being used. Under vSphere 5.1 and the latest version of MEM, the storage heartbeat is no longer needed. I have modified the post accordingly, including the link below that references the latest Dell EqualLogic MEM documentation. I’d like to thank the Dell EqualLogic Engineering team for pointing out this important distinction.

Helpful Links

A great summary on the EqualLogic MEM and vStorage APIs

http://whiteboardninja.wordpress.com/2011/02/01/equallogic-mem-and-vstorage-apis/

Cormac Hogan’s great post on how the MEM works its magic.

http://blogs.vmware.com/vsphere/2011/11/dells-multipath-extension-module-for-equallogic-now-supports-vsphere-50.html

Some performance testing of the MEM

http://www.spoonapedia.com/2010/07/dell-equallogic-multipathing-extension.html

Official Documentation for the EqualLogic MEM (Rev 1.2, which covers vSphere 5.1)

http://www.equallogic.com/WorkArea/DownloadAsset.aspx?id=11000

If only this had been written two years ago when I first started working on this 🙂 So much time spent trying to figure it out the first time. Nice summary and good links. Can’t wait to get on 5.1. You can also use VUM to install the software if you have that in place.

Thanks for the kind words Dave. I had considered mentioning that VUM could be used as well, but was really trying to keep the post simple and relatively short. Thanks for reading!

When running the configure script above I get the following error:

At line:1 char:89

+ … 00 -nics=vmnic1,vmnic2 -ips=10.130.250.191,10.130.250.192 –

+ ~

Missing argument in parameter list.

+ CategoryInfo : ParserError: (:) [], ParentContainsErrorRecordException

+ FullyQualifiedErrorId : MissingArgument

Can you explain how to correct this error? Also, do I leave the heartbeat setting out of the script?

Hi David,

It is hard to tell where the error stems from based on what was pasted, but it does seem to be a syntax issue (take a look at character 89, whatever that might be). What I might suggest is to copy my sample string into something like Notepad, adjust it as necessary, and then paste that into the command line window. If that doesn’t work, you can step through the process manually via the first “setup.pl” string shown in step 3.

Leaving the heartbeat out is really only an option for 5.1. It will NOT return good results if you try to work without a storage heartbeat in 5.0 or earlier. I haven’t worked with 5.1 enough yet to know the nuances of transitioning away from a storage heartbeat, so I can’t comment on that quite yet.
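On David’s syntax error: the output above comes from the PowerShell parser. One plausible culprit when copying a command from a web page is that blog software turns each `--` into an en dash and straight quotes into curly ones. This is a guess, not a confirmed diagnosis. The Python sketch below normalizes those characters so the string can be pasted as plain ASCII. The function names are hypothetical.

```python
# Typographic characters that blog software commonly substitutes for ASCII.
REPLACEMENTS = {
    "\u2013": "--",  # en dash, often what "--" becomes when published
    "\u2014": "--",  # em dash
    "\u2018": "'",   # left single quote
    "\u2019": "'",   # right single quote
    "\u201c": '"',   # left double quote
    "\u201d": '"',   # right double quote
}


def normalize_command(text):
    """Replace typographic dashes and quotes with plain ASCII equivalents."""
    for fancy, plain in REPLACEMENTS.items():
        text = text.replace(fancy, plain)
    return text


def non_ascii_chars(text):
    """List any remaining non-ASCII characters so they can be checked by hand."""
    return sorted({ch for ch in text if ord(ch) > 127})


if __name__ == "__main__":
    pasted = "setup.pl \u2013configure \u2013server=192.168.199.11 \u2013mtu=9000"
    fixed = normalize_command(pasted)
    print(fixed)  # setup.pl --configure --server=192.168.199.11 --mtu=9000
    print(non_ascii_chars(fixed))  # []
```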

>>The assumption that if they don’t have vSphere Enterprise Plus licensing, they can’t use MEM.

Can you add anything on this point? I’ve had Dell MEM working on 5.1 Essentials Plus – but upon running into some latency and IO spikes, no one that I spoke with from Dell or VMware could say it wasn’t caused by limited functionality due to the licensing restriction. We’ve switched to Round Robin and seen small improvements across the board in too small a test sample to really count: http://communities.vmware.com/message/2181679#2181679

Interesting. I might ping you for some more information out of pure curiosity. One option to try (if available) is to run that ESXi host in Evaluation Mode, so that you can use the DELL_PSP_EQL_ROUTED Path Selection Policy. Then re-run the testing to see if you observe normal/typical performance results. When you were running under your non-EPP licensing, which Path Selection Policy did it choose for you? DM me (at p e t e r . g a v i n at l i v e . c o m ) if you’d like to take this offline.

Thanks Pete – we can leave it up here in case it helps the next guy, but let me know if I start embarrassing myself. I might have a go at your test later in the week. I’m curious as well. Formerly DELL_PSP_EQL_ROUTED was the default PSP and had all appearances of normal operation – until we looked into the vmkwarning.log. But actually, I think what we saw there was pretty much normal too, but maybe the kid from vmware hadn’t looked there much himself 😉

I should note that the improvements indicated in the linked IOmeter output don’t appear to correlate to a relief from the latency spikes or, separately, the production-slaying utilization during sVmotion operations (new as of 5.1, it seems to me) for which I opened my investigation.

Were you seeing “naa.[UUID] performance has deteriorated” messages? I would also be curious how you are measuring the latency you are referring to. Have you submitted a case to EqualLogic?

I was seeing those errors, and just checked and saw them again today on one host that drove a 133 GB database backup folder copy between two of its guests. SAN HQ shows a read latency spike (60ms) on the sending volume and a write latency spike (50ms) on the receiving volume. The guest performance charts in vCenter show corresponding latency and usage in the advanced Disk performance view. I have been working with Tier 2 EQL support. I think their position is that latency spikes during activity are to be expected. What we’ve been scratching our heads over is “storms” of latency spikes of up to 200ms at regular intervals, seen in SAN HQ (1hr, 1day, 7day zoom), that don’t appear to correspond to significant disk activity.

What I’ve noticed is some potentially inaccurate latency data during very quiet times, with little to no real activity. I’ve chosen to disregard these as algorithmic anomalies, because once there is some activity, the numbers seem realistic. So here are a couple of ideas. Just ideas here:

1. Let latency take a back seat for a moment, and perform some large-payload operations to see what kind of effective throughput you are getting. Then see if that throughput is affected by the supposed latency incurred.

2. Compartmentalize. For instance, if possible, start out with a physical box with a couple of NICs for iSCSI, connect them directly to your switch fabric, and install the HIT/ME. Connect it to a volume and perform some testing and observations there (manual, IOMeter, etc.). Then, once that baseline is established, build out a VM with a couple of vNICs for guest-attached volumes, and install the HIT/ME. Run the tests established above, and see if there is a difference.

3. Take a look at other influencing factors. Are jumbo frames enabled? Are you mixing jumbo and standard frames on those vSwitches? I’ve seen many a problem with jumbo frames unless everything is absolutely perfect. What about the interconnect between the switches in your SAN switch stack? Is it trunked or stacked? What is your SAN switch stack composed of?

That way you can narrow down the focus of this. Feel free to share what you find.
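On the jumbo frames question in point 3, a common end-to-end check is a don’t-fragment ping from the host’s vmkernel interface using the largest payload that fits in one frame. That payload is the MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header, i.e. 8972 bytes for a 9000-byte MTU. The Python sketch below just does that arithmetic and prints the `vmkping` command to run on the ESXi host. The target is the Group IP from this post’s example, and the function names are hypothetical.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header


def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def vmkping_command(target, mtu=9000):
    # -d sets the don't-fragment bit; -s sets the ICMP payload size in bytes.
    return f"vmkping -d -s {max_ping_payload(mtu)} {target}"


if __name__ == "__main__":
    print(vmkping_command("192.168.198.65"))        # vmkping -d -s 8972 192.168.198.65
    print(vmkping_command("192.168.198.65", 1500))  # vmkping -d -s 1472 192.168.198.65
```

If the 8972-byte ping fails while the 1472-byte one succeeds, something in the path is not passing jumbo frames.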

Hi Pete, thanks for the great post. It really helped me understand things and get MEM working. However, I’ve run into an issue. I installed the latest MEM on ESXi 5.1 and it APPEARS to be functioning normally, but while I have 3x 1GB physical paths from ESXi to the SAN, I was only seeing TWO connections logged into a single volume on the EQL PS6100. I opened a case with Dell support and they were insistent that the MEM is NOT supported on vSphere Standard as of 5.1. Can you shed any light on this? Could this actually be what’s keeping me from seeing more than 2 connections to a single volume? I actually DO see three paths to the SAN from ESXi *briefly* after rebooting, but then it drops to two and the EQL log shows one of them simply “logging out.”

I then switched back to Round Robin without changing anything else, and after a reboot I indeed see ALL THREE paths to the SAN, and none have “logged out” after at least 10 minutes (with DELL_PSP_EQL_ROUTED I would see it drop from 3 to 2 connections within a couple of minutes post-reboot). I’m very confused about the whole MEM-supported-on-Standard vs. Enterprise thing. Even the MEM docs themselves say you need Enterprise/Enterprise Plus to run the MEM. I’m trying to figure out if I’m just running something that is no longer supported, if I somehow have the MEM misconfigured, OR if it has some sort of “smarts” and only creates the connections it NEEDS based on performance. That’s the only other thing I can think of that would cause this behavior.

Any insights would be greatly appreciated.

Thanks for reading Jim. Many are confused with the licensing regarding MEM. Hopefully I can help clarify matters in an accurate way that will be helpful.

While you are entitled to all software provided by Dell/EqualLogic (assuming you have a valid support contract), the MEM is host-side software, so it is subject to whatever features are available on the host OS (e.g. vSphere). On the vSphere side, the Storage APIs for Multipathing are a feature set in Enterprise or Enterprise Plus. Dell basically takes the position that how the Storage APIs for Multipathing are enforced is completely up to VMware. What you might be experiencing are behavioral changes that vSphere perhaps made between 5.0 and 5.1 in how it handles the Storage APIs and its own licensing mechanisms. I have not done any formal testing on what the fallback is when one is using MEM during, say, an evaluation, and then enters vSphere Standard license keys. Regardless, if you are not licensed to use the advanced pathing policy, you will need to change this to Round Robin.

Here is a comparison of editions: http://www.vmware.com/in/products/datacenter-virtualization/vsphere/compare-editions.html

For further information on the MEM, look at EqualLogic TR1074.

Thanks for the additional insight, Pete! That helps. One thing I’m still not 100% clear on is if this is a change in 5.1 (and MEM *was* supported on standard prior to that), or if I’m misunderstanding your bullet point at the beginning of this blog where you say

“The assumption that if they don’t have vSphere Enterprise Plus licensing, they can’t use MEM.”

Was this something that used to work on standard and is now “broken” due to new vSphere 5.1 licensing, or am I misinterpreting your statement that users of non-Enterprise vSphere can still use the MEM portion? Dell claims it was NEVER supported on standard, so as you can see I’m still a wee bit confused 😉

Yes, it is a misunderstanding. The point I was trying to make regarding MEM and non-EPP customers is that one can still use it to configure and standardize the creation of your vSwitch and vmkernel storage configuration (if you do this a lot, you may notice it is time-consuming, with a lot of opportunity to overlook something). You just don’t get the benefit of the path policy. Dell is correct that at no point was a non-EPP license allowed to use (or able to use) the special DELL_PSP_EQL_ROUTED path policy, regardless of version. But the MEM package itself (the software that helps you configure everything) can be used without issue. You just need to use Round Robin.

Thanks SO much for the additional clarification. It all makes sense now. And yes, I did use the mem installer to configure my iSCSI and it was never easier! Can’t imagine doing it any other way, now.

Just wanted to follow up in case anyone comes upon this thread and finds the info useful. I kept being nagged by the contradiction that people were saying you can’t use the MEM (except for install/configure of iSCSI) on anything but vSphere Enterprise/E+ yet I DID have it configured and working on vSphere standard, albeit with only two connections to the SAN.

Well, I read Dell TR1074 you reference above and it all finally made sense! While I had 3x 1GB iSCSI connections from host-to-SAN, BY DEFAULT the MEM only sets up TWO CONNECTIONS PER VOLUME, the assumption being that you will have multiple group members and the volume will be spanned across multiple arrays, giving a (default) total of 6 connections per volume across all members.

I was observing only two connections because it was the ONLY volume I had created on the array thus far… as soon as I created more volumes, I saw that while each got only two connections, ALL of the iSCSI paths from the host were being utilized and balanced across. Duh! I further read that you can in fact increase the default “membersessions” in the MEM to something that will force all iSCSI paths to be utilized against a single volume (most likely in a single-member scenario), but they caution that this is typically only done in lab setups where you’re trying to benchmark the SAN and need all connections active to a single volume, and in production you likely won’t see a benefit.

Anyway, all that is to say that I have no idea WHY the MEM installed and runs perfectly on my vSphere standard hosts, but it appears to contradict Dell support and TR1074 itself when they claim you need Enterprise/+… And I’m sure it’s actually working, too… When I observe Manage Paths on a datastore I see DELL_PSP_EQL_ROUTED as the path type and VMW_SATP_EQL as the storage array type… and now that I understand the default two-connection-per-volume thing, I can see that it’s behaving exactly as it should. Freaky.
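Jim’s observation can be turned into a rough back-of-the-envelope model. This Python sketch uses only the defaults described above: two sessions per volume per member, and at most six per volume. It also assumes every host connects to every volume. The real MEM manages sessions dynamically, and TR1074 documents the actual parameters, so treat this as an estimate, not the MEM’s algorithm. `volume_session_cap` is a hypothetical name for the six-session total. `total_sessions` gives a group-wide figure you could compare against a session limit, such as the 1024 cited in a later comment.

```python
def sessions_per_volume(host_paths, members_spanned,
                        member_sessions=2, volume_session_cap=6):
    """Rough estimate of the iSCSI sessions one host opens to one volume.

    member_sessions: sessions per volume per group member (default 2, as above).
    volume_session_cap: hypothetical name for the per-volume total (default 6).
    """
    per_member = min(member_sessions, host_paths)  # can't exceed host paths
    return min(per_member * members_spanned, volume_session_cap)


def total_sessions(hosts, volumes, host_paths, members_spanned, **limits):
    """Group-wide estimate, assuming every host connects to every volume."""
    return hosts * volumes * sessions_per_volume(host_paths, members_spanned, **limits)


if __name__ == "__main__":
    print(sessions_per_volume(3, 1))  # 2: Jim's single-member case
    print(sessions_per_volume(3, 3))  # 6: volume spread over three members
    print(total_sessions(hosts=4, volumes=20, host_paths=3, members_spanned=1))  # 160
```

With three 1GB paths and a single member, the model gives two sessions per volume, which matches what Jim saw. Raising `member_sessions` to 3 gives three, which mirrors the lab-only “membersessions” tweak he mentions.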

Hey, thanks for this post. I’m going to be installing ESXi 5.1 on some new hosts in a few weeks and will need to get MEM up and running. This step-by-step post is fantastic as we had a hell of a time muddling thru the process in 4.1. I really appreciate you taking the time to put this together.

Thank you Chuck! It is always rewarding to hear that the posts are helping someone out.

– Pete

Mind if I bounce a couple questions off you? Researching the MEM install has left me with a few things that documentation doesn’t really cover.

First, all the docs I see show 2 NICs being used for multipathing. Is there any issue with using more NICs than that? I don’t think we’ll be approaching the 1024 session limit in our environment, so I’m not too worried about it. Are there any performance or other concerns I should be looking for? Is 3+ NICs a supported configuration?

Second, I need to run thru the installer on each host individually, right? I can’t just do it on one host, then apply a host profile to the remaining hosts, right? I’m guessing the host profile functionality would only recreate the vswitch, but probably wouldn’t properly bind & install the MEM module.

Thanks for your time and any thoughts!

You certainly may have more than two uplinks, but based on what I’m hearing about your environment, I would recommend an initial design/deployment that focuses on the typical things (needs, resiliency, etc.). In other words, no need to add complexity just because you can.

Yes, you will need to run through the process described in the post on each host.