A typical conversation with one of our Developers goes like this: “Hey, that new VM you gave us is great, but can you make it, say, 10 times faster?” Another day, another request from our Development Team to make our build infrastructure faster. What is a build infrastructure, and what does it have to do with vSphere? I’ll tell you…

Software Developers have to compile, or “build,” their source code before it is really usable by anyone. A build can involve just a small bit of code, or millions of lines. Developers will often perform builds on their own workstations, as well as on designated “build” systems. These dedicated build systems are often part of a farm of systems that churn out builds on a fixed schedule or on demand. Each might be responsible for different products, platforms, versions, or build purposes, which can add up to dozens of build machines. Most of this is orchestrated by scripts or build automation tools. This practice is often referred to as Continuous Integration (CI), and it goes hand in hand with Test Driven Development and Lean/Agile Development practices.

In the software world, waiting for builds is wasting money. Slower turnaround times and longer cycles leave less time, or willingness, to validate that changes to the code didn’t break anything. So there is a constant desire to make all of this faster.

Not long after I started virtualizing our environment, I demonstrated the benefits of virtualizing our build systems. The physical build systems were often tired old machines lacking uniformity, protection, revision control, or performance monitoring. That is not exactly a desired recipe for business critical systems. We have benefited in so many ways from virtualizing these systems, whether it is cloning a system in just a couple of minutes, or knowing they are replicated offsite without even thinking about it.

But there is one problem. Compiling code takes CPU. Massive amounts of it. In my experience, nothing takes advantage of multiple cores better than a compiler. Many applications simply aren’t able to multi-thread, while others can but don’t do it very well, including some well known enterprise applications. Throw the right command line switch at a compiler, and it will peg your latest rocket of a workstation.

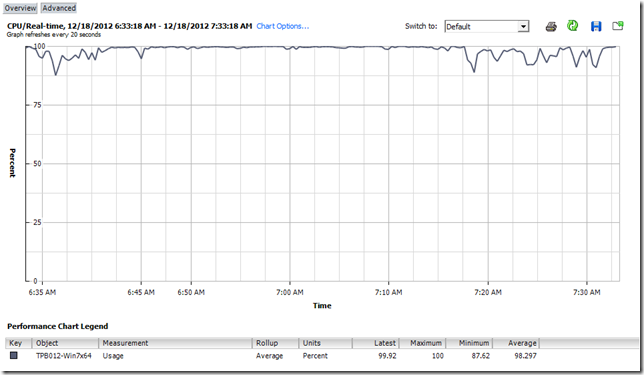

Take a look at the chart above. This is a 4 vCPU VM. That solid line pegged at 100% nearly the entire time is pretty much how the system runs during a compile. The exceptions are tasks like linking, which are single-threaded. What you see here can go on for hours at a time.

This is a different view of that same VM above, showing a nearly perfect distribution of threading across the vCPUs assigned to the VM.
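The single-threaded steps mentioned above put a ceiling on how much extra vCPUs can help. Amdahl's law is the standard way to estimate that ceiling. The sketch below assumes, purely for illustration, that 90% of a build parallelizes and 10% (linking and similar steps) does not; the real split for any given build is unknown and would have to be measured.

```python
def amdahl_speedup(parallel_fraction: float, n_cores: int) -> float:
    """Ideal speedup over a single core when only parallel_fraction of the
    work can be spread across n_cores (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_cores < 1:
        raise ValueError("n_cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_cores)


# Hypothetical build: 90% parallel work, 10% serial (e.g. linking).
for vcpus in (1, 4, 8, 12, 16):
    print(f"{vcpus:>2} vCPUs -> {amdahl_speedup(0.90, vcpus):.2f}x")
```

With that assumed 90/10 split, going from 4 to 8 vCPUs takes the ideal speedup from about 3.1x to about 4.7x, 16 vCPUs reaches only 6.4x, and no number of vCPUs can exceed 10x.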

So, as you can see, the efficiency of the compilers actually presents a bit of a problem in the virtualized world. Let’s face it, one of the values virtualization provides is the unbelievable ability to give otherwise wasted CPU cycles to other systems that really need them. But what happens when every build VM really needs them? Well, consolidation ratios go down, and sizing becomes really important.
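Why consolidation ratios drop can be put in rough numbers. The sketch below makes the simplifying assumption that each build VM keeps every vCPU busy and that only physical cores count (no hypervisor overhead, no hyper-threads), and shows how few such VMs fit on a host before they start competing for cores. The function names are hypothetical.

```python
def busy_vms_per_host(physical_cores: int, vcpus_per_vm: int) -> int:
    """How many VMs that keep every vCPU at 100% fit on one host before their
    combined vCPUs outnumber its physical cores (ignores hypervisor overhead
    and hyper-threads)."""
    if physical_cores < 1 or vcpus_per_vm < 1:
        raise ValueError("core and vCPU counts must be positive")
    return physical_cores // vcpus_per_vm


def vcpu_to_core_ratio(vm_vcpus: list, physical_cores: int) -> float:
    """Overall vCPU:pCPU ratio for a host; above 1.0 means CPU is overcommitted."""
    return sum(vm_vcpus) / physical_cores


# Hypothetical 8-core host running 4-vCPU build VMs.
print(busy_vms_per_host(8, 4))              # 2
print(vcpu_to_core_ratio([4, 4, 4, 2], 8))  # 1.75
```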

Compiling from source code can involve handling literally millions of tiny files, so you might expect a ton of disk activity. There certainly can be I/O, but builds are rarely disk bound. This became clear after some of the Developers’ physical workstations had SSDs installed. After an initial hiccup with some bad SSDs, further testing showed almost no speed improvement. The performance data on those workstations showed that the SSDs had no effect because the systems were always CPU bound.
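The SSD result follows the same logic as Amdahl's law, applied to storage instead of cores: faster disk only helps the fraction of wall-clock time actually spent waiting on disk. The 5% I/O-wait figure and 10x storage speedup below are illustrative assumptions, not measurements from these workstations.

```python
def overall_speedup(io_wait_fraction: float, storage_speedup: float) -> float:
    """Whole-build speedup when only the share of wall-clock time spent
    waiting on storage gets faster (Amdahl's law applied to I/O)."""
    if not 0.0 <= io_wait_fraction <= 1.0:
        raise ValueError("io_wait_fraction must be between 0 and 1")
    if storage_speedup <= 0:
        raise ValueError("storage_speedup must be positive")
    return 1.0 / ((1.0 - io_wait_fraction) + io_wait_fraction / storage_speedup)


# Hypothetical CPU-bound build: 5% of wall time waiting on disk,
# storage made 10x faster by an SSD.
print(f"{overall_speedup(0.05, 10.0):.3f}x")
```

Under those assumptions, a 10x faster disk yields only about a 5% faster build.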

Even so, some evidence suggests that the pool of Dell EqualLogic arrays (PS6100 and PS600) used in this environment is nearing its performance thresholds. Ideally, I would like to add an EqualLogic hybrid array; its SSD/SAS combination would give me the IOPS needed if I started running into I/O issues. Unfortunately, that will probably have to wait until a bit later in the year.

RAM for each build system is a bit more predictable. Most systems are not memory hogs when compiling; 4 to 6 GB of RAM used during a build is quite typical. Linux tends to use more if it is available, especially for caching file I/O.
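To see how much of a Linux build VM's memory is actually used by processes versus held as file cache, the fields in `/proc/meminfo` can be split apart. This is a simplified sketch: it treats `Buffers` plus `Cached` as cache, which is an approximation (newer kernels also report `MemAvailable`, a better estimate of memory available for new work). The function name is hypothetical.

```python
import os
from typing import Dict


def memory_breakdown(meminfo_text: str) -> Dict[str, int]:
    """Split Linux memory (in kB) into process use vs. buffers/page cache,
    given the text of /proc/meminfo."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])  # values are reported in kB
    total = fields["MemTotal"]
    free = fields["MemFree"]
    cache = fields.get("Buffers", 0) + fields.get("Cached", 0)
    return {"total_kb": total, "cache_kb": cache, "app_used_kb": total - free - cache}


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print(memory_breakdown(f.read()))
```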

The other variable is the compiler. Windows platforms may use something like Visual Studio, while Linux will typically use GCC. The differences in performance can be startling. Compile the exact same source code on two machines with identical specs, one running Windows/Visual Studio and the other running Linux/GCC, and the Linux machine will finish the build in one third of the time. I can’t do anything about that, but it is a worthy data point when trying to speed up builds.

The Existing Arrangement

All of the build VMs (along with the rest of the VMs) currently run in a cluster of 7 Dell M6xx blades inside a Dell M1000e enclosure. Four of them are Dell M600s with dual socket, Harpertown based chips. The other three are Dell M610s running Nehalem chips. The Harpertown chips don’t support Hyper-Threading, so vSphere sees a total of just 8 logical cores on those hosts. The Nehalem based systems show 16 logical cores.
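The logical core counts follow from sockets, cores per socket, and Hyper-Threading. The sketch assumes 4 cores per socket for both blade types: the Harpertown E5430 is a quad-core part, and 4 cores per socket is what the 16 logical cores reported for the dual-socket, Hyper-Threaded Nehalem M610s imply. The class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HostCpu:
    model: str
    sockets: int
    cores_per_socket: int
    hyperthreading: bool

    @property
    def physical_cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def logical_cores(self) -> int:
        # With Hyper-Threading enabled, each physical core presents two logical CPUs.
        return self.physical_cores * (2 if self.hyperthreading else 1)


m600 = HostCpu("M600 (Harpertown)", sockets=2, cores_per_socket=4, hyperthreading=False)
m610 = HostCpu("M610 (Nehalem)", sockets=2, cores_per_socket=4, hyperthreading=True)
for host in (m600, m610):
    print(f"{host.model}: {host.physical_cores} physical, {host.logical_cores} logical")
```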

All of the build systems (25 as of right now, running a mix of Windows and Linux) have no more than 4 vCPUs. I’ve held firm to a limit of no more than 50% of a host’s total physical core count. I’ve taken some heat for it, but I’ve been rewarded with very acceptable CPU Ready times. After all, this cluster has to support the rest of our infrastructure as well. By physical workstation standards (especially expensive Development workstations), these VMs are pathetically slow. Time to do something about it.
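The 50% rule is easy to express, with one detail worth making explicit: because DRS or vMotion can place a VM on any host in the cluster, the smallest host sets the cap. The sketch below (function names hypothetical) applies that rule to the existing hosts, all of which have 8 physical cores, and, for comparison, to 16-core hosts.

```python
from typing import List


def max_vcpus(physical_cores: int, cap_fraction: float = 0.5) -> int:
    """Largest vCPU count for a single VM under a 'no more than
    cap_fraction of the host's physical cores' rule."""
    return max(1, int(physical_cores * cap_fraction))


def cluster_vcpu_cap(host_physical_cores: List[int], cap_fraction: float = 0.5) -> int:
    # A VM can land on any host in the cluster, so the smallest host sets the cap.
    return max_vcpus(min(host_physical_cores), cap_fraction)


print(cluster_vcpu_cap([8] * 7))   # existing 7-host cluster
print(cluster_vcpu_cap([16] * 4))  # hypothetical cluster of 16-core hosts
```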

The Plan

The plan is simple: increase CPU resources. For the cluster, I could either scale up (bigger hosts) or scale out (more hosts). In my case, I was really limited by the capabilities of the existing hosts, and I wanted to avoid buying more vSphere licenses unless I had to, so it was well worth it to replace the 4 oldest M600 blades (using Intel Harpertown chips). The new blades, Dell M620s, will have 192 GB of RAM versus just 32 GB in the old M600s. And lastly, in order to take advantage of the new chip architecture in the new blades, I will be splitting them off into a dedicated 4 host cluster.

| | New M620 Blades | Old M600 Blades |
|---|---|---|
| Chip | Intel Xeon E5-2680 | Intel Xeon E5430 |
| Clock speed | 2.7 GHz (or faster) | 2.66 GHz |
| Physical cores per host | 16 | 8 |
| Logical cores per host | 32 | 8 |
| RAM per host | 192 GB | 32 GB |

The new blades will have dual 8-core Sandy Bridge processors, giving me 16 physical cores and 32 logical cores (with Hyper-Threading) per host. That is double the physical cores and 4 times the logical cores of the older hosts. I will also be paying the premium price for clock speed. I rarely get the fastest clock speed of anything, but in this case, it can truly make a difference.
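The per-host and whole-cluster differences can be tallied straight from the table. The sketch below simply multiplies the table's per-host figures across 4 hosts; it says nothing about per-core performance, which also depends on clock speed and chip architecture. The names are hypothetical.

```python
OLD_M600 = {"physical_cores": 8, "logical_cores": 8, "ram_gb": 32}
NEW_M620 = {"physical_cores": 16, "logical_cores": 32, "ram_gb": 192}


def compare(old: dict, new: dict, hosts: int) -> dict:
    """Per resource: (old cluster total, new cluster total, per-host ratio)."""
    return {k: (old[k] * hosts, new[k] * hosts, new[k] / old[k]) for k in old}


for resource, (old_total, new_total, ratio) in compare(OLD_M600, NEW_M620, hosts=4).items():
    print(f"{resource:<15} {old_total:>4} -> {new_total:>4}  ({ratio:g}x per host)")
```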

I have to resist throwing in the blades and just turning up the dials on the VMs. I want to understand at what level I get the greatest return. I also want to see at what point the dreaded CPU Ready value starts cranking up. I’m under no illusion: a given host only has so many CPU cycles, no matter how powerful it is. But in this case, it might be worth tolerating some degree of contention if the builds finish measurably faster most of the time.
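vCenter's performance charts report CPU Ready as a summation in milliseconds, which is easier to reason about as a percentage of the sample interval. The sketch below uses the commonly documented conversion, ready ms / (interval s x 1000) x 100, and divides by the vCPU count to get a per-vCPU average. The 20-second default is the real-time chart interval; the example value is made up, and the function name is hypothetical.

```python
def cpu_ready_percent(ready_ms: float, interval_s: int = 20, vcpus: int = 1) -> float:
    """Convert a CPU Ready summation (ms) into percent of the sample
    interval, averaged per vCPU. interval_s defaults to the 20 s
    real-time chart interval."""
    if interval_s <= 0 or vcpus <= 0:
        raise ValueError("interval_s and vcpus must be positive")
    return ready_ms * 100 / (interval_s * 1000 * vcpus)


# Hypothetical sample: a 4-vCPU build VM reporting 1600 ms of ready time
# in one 20-second real-time sample.
print(f"{cpu_ready_percent(1600, interval_s=20, vcpus=4):.1f}% per vCPU")
```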

So how powerful can I make these VMs? Do I dare go past 8 vCPUs? 12 vCPUs? How about 16? Any guesses? And what about NUMA, and the negative impact that might occur if a VM spans more than one NUMA node? Stay tuned! …I intend to find out.
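A rough first check on the NUMA question is how many NUMA nodes a VM's vCPUs would need if each vCPU got its own physical core. The sketch assumes one NUMA node per socket on the dual 8-core E5-2680 hosts and does not count hyper-threads; how the vSphere NUMA scheduler actually places a given VM is exactly what the testing will have to show. The function name is hypothetical.

```python
import math


def numa_nodes_spanned(vcpus: int, cores_per_node: int) -> int:
    """Minimum NUMA nodes needed if every vCPU gets its own physical core
    (hyper-threads not counted)."""
    if vcpus < 1 or cores_per_node < 1:
        raise ValueError("vcpus and cores_per_node must be positive")
    return math.ceil(vcpus / cores_per_node)


# Assumption: one NUMA node per 8-core socket on the new hosts.
for vcpus in (4, 8, 12, 16):
    nodes = numa_nodes_spanned(vcpus, cores_per_node=8)
    note = "" if nodes == 1 else "  (spans nodes: a 'wide' VM)"
    print(f"{vcpus:>2} vCPUs -> {nodes} NUMA node(s){note}")
```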

Sounds like you’re going to have some fun, Pete :D. When will you start the upgrade? Hehehe, I’m really curious about how everything will turn out.

Thanks for reading Rafael. I’m hoping to have them purchased and up by the end of January.

Looking forward to seeing how this ends up. Are you running vCOPs? It might give you some more performance data. If you are current on support, the basic version is now included with vSphere Enterprise. I’ve had lots of people tell me not to use multiple vCPUs unless absolutely necessary. It really is a black art that takes lots of monitoring to know exactly when it is and isn’t a good time to have multiple CPUs. Too bad there isn’t a “core on demand” technology that could add and remove cores as multi-threaded applications needed them. I’m sure it will come someday.

Not running vCOPs at the moment. I will introduce it when I go up to 5.1, and will stick to the typical approaches to monitoring for the time being. What is really interesting in this particular use case is that some level of contention might be acceptable, depending on how much there is and the payoff from dialing the systems up. It should be fun regardless. Thanks for reading, Dave!