Implementing new technology that solves real problems is great. It is exciting, and you get to stand on the shoulders of the smart folks who dreamed up the solution. But with all of that glory come new design and operational considerations. This isn’t a bad thing. It is just different. The magic of virtualization didn’t excuse us from needing to understand the design and operational considerations of the new paradigm. The same goes for implementing host-based caching in a virtualized environment.

Implementing FVP is simple, and the results can be impressive. For many, that is about all the effort they may end up putting into it. But there are design considerations that will help maximize the investment and minimize false impressions or costly mistakes. I want to share what I have learned from my real-world workloads, so that you can understand what to look for, and possibly how to get more out of your investment. While FVP accelerates both reads and writes, it is the latter that warrants the most consideration, so that will be the focus of this post.

When accelerating storage using FVP, the factors that I’ve found to have the most influence on how much your storage I/O is accelerated are:

- Interconnect speed between hosts of your pooled flash

- Performance delta between your flash tier and your storage tier

- Working set size of your data

- Write I/O duty cycle of your VMs (including peak writes and their duration)

- I/O size of your writes (which can vary within each workload)

- Likelihood or frequency of DRS or manual vMotion activities

- Native speed and consistency of your flash (the flash itself, and the bus speed)

- Capacity of your flash (more of an influence on read caching, but can have some impact on writes too)

Write-back caching & vMotion

Most know by now that to guard against potential data loss in the event of a host failure, FVP provides redundancy for write-back caching through the use of one or more peers. The interconnect used is the vMotion network. While FVP does a good job of decoupling the VM from having to wait on the backing datastore, a VM configured for write-back with redundancy must have each write committed to its local flash AND to the one or more peers before the write ACK is returned to the VM.

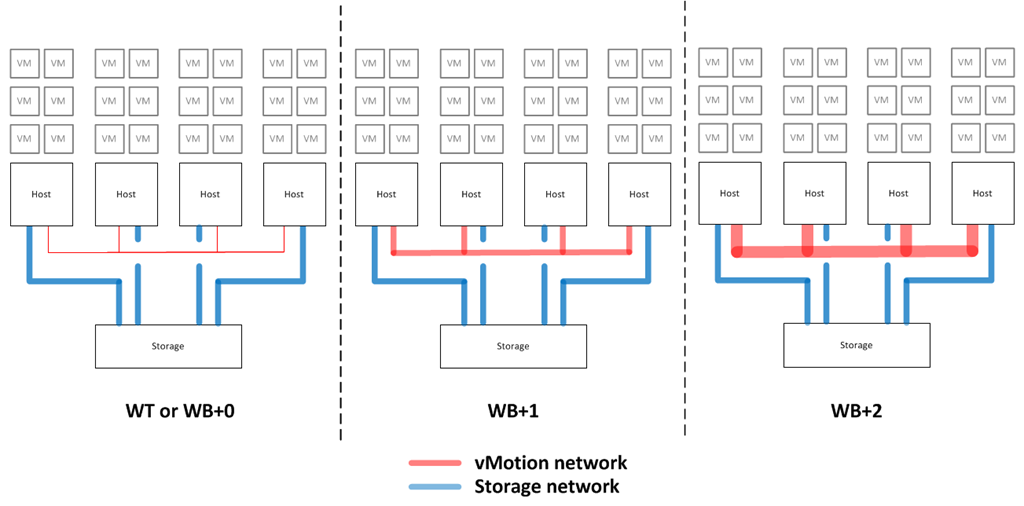

What does this mean for your environment? More traffic on your vMotion network. In a cluster NOT accelerated by FVP, the host uplinks that serve a vMotion network might see relatively little traffic, with bursts only during vMotion activities. That would also be the case if you were running FVP in write-back mode with no peers (WB+0). The image below shows what the activity on the vMotion network looks like, as perceived by one of the hosts, after the VMs were configured for write-back with redundancy of one peer. In this case the writes were averaging about 12MBps across the vMotion network. The spike is where a vMotion kicked off; it reaches the peak output of a 1GbE interface, about 125MBps.
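The ~125MBps ceiling is just the 1Gb/s line rate divided by 8 bits per byte. A minimal Python sketch of that arithmetic follows; it ignores Ethernet, IP and TCP overhead, so real usable throughput will be somewhat lower, and the helper names are hypothetical rather than anything exposed by FVP.

```python
def link_MBps(link_mbps: float) -> float:
    """Convert a link speed in megabits/s to megabytes/s (8 bits per byte).

    Raw line rate only: protocol overhead is ignored (assumption).
    """
    return link_mbps / 8


def utilization(traffic_MBps: float, link_mbps: float) -> float:
    """Fraction of a link's raw capacity consumed by the given traffic."""
    return traffic_MBps / link_MBps(link_mbps)


if __name__ == "__main__":
    print(f"1GbE raw capacity:  {link_MBps(1000):.0f} MBps")
    print(f"10GbE raw capacity: {link_MBps(10000):.0f} MBps")
    # ~12 MBps of WB+1 peer traffic, as observed on one host in the post
    print(f"12 MBps on 1GbE uses {utilization(12, 1000):.1%} of the link")
```

The steady ~12MBps of peer traffic is under 10% of a 1GbE link on its own; it is the vMotion bursts on top of it that reach the ceiling.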

Is it bad that the traffic is running over your vMotion network? No, not necessarily. It has to run over something. But with this knowledge, it is easy to see that bandwidth for inter-server communication will be more important than ever before. Your infrastructure design may need to be tweaked to accommodate the new role that the vMotion network plays.

Can one get away with a 1GbE link for cross-server communication? Perhaps. It really depends on the factors above, which can sometimes be hard to determine. So with all of the variables to consider, it is sometimes easiest to circle back to what we do know:

- Redundant write-back caching with FVP will use network connectivity (via the vMotion network) for every single write that occurs for an accelerated VM.

- The network traffic generated by redundant write-back caching is multiplied by the number of peers configured per accelerated VM.

- The commit time (latency) of an accelerated write will only be as fast as the slowest path. Your vMotion network will likely be slower than the local bus. A poor-quality SSD or an older-generation bus could be a bottleneck too.

- vMotion will happily use every bit of bandwidth available to it.

- VMs that are committing a lot of writes might also be taxing CPU resources, which may trigger DRS to rebalance the load, thus creating more vMotion traffic. Those busy VMs may be using more active memory pages as well, which may increase the amount of data to move during the vMotion process.
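The first three points can be captured in a toy model. This sketch assumes the local commit and each peer commit are issued in parallel, so the VM waits for the slowest of them; the function names are hypothetical, and the latency figures in the usage lines are placeholders, not measurements.

```python
def peer_write_traffic_MBps(vm_write_MBps: float, peers: int) -> float:
    """Extra network traffic from redundant write-back: every write goes to each peer."""
    return vm_write_MBps * peers


def write_ack_latency_ms(local_flash_ms: float, peer_paths_ms: list[float]) -> float:
    """Latency before the write ACK is returned to the VM.

    Each peer path is network round trip + peer flash commit. Assuming all
    commits run in parallel, the ACK waits for the slowest one.
    """
    return max([local_flash_ms, *peer_paths_ms])


if __name__ == "__main__":
    # Hypothetical numbers: fast local flash, slower peers across the vMotion network
    print(write_ack_latency_ms(0.1, []))          # WB+0: local flash only
    print(write_ack_latency_ms(0.1, [0.6]))       # WB+1
    print(write_ack_latency_ms(0.1, [0.6, 1.5]))  # WB+2: the slowest peer dominates
    print(peer_write_traffic_MBps(5.0, 2))        # 5 MBps VM at WB+2 -> 10.0 MBps on the wire
```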

The multiplier of redundancy

Let’s run through a simple scenario to better understand the potential impact an undersized vMotion network can have on the performance of write-back caching with redundancy. The example addresses writes only.

- 4 hosts each have a group of 6 VMs that consistently write 5MBps per VM. Traditionally, these 24 VMs would be sending a total of 120MBps to the backing physical storage.

- When write-back is enabled without any redundancy (WB+0), the backing storage will still see the same amount of writes committed, but they will arrive in a slightly different way: sequentially, and smoothed out as data is flushed to the backing physical storage.

- When write-back is enabled and a write redundancy of “local flash and 1 network flash device” (WB+1) is chosen, the backing storage will still eventually see 120MBps, but there will be an additional 120MBps of data going to the host peers, traversing the vMotion network.

- When write-back is enabled and a write redundancy of “local flash and 2 network flash devices” (WB+2) is chosen, the backing storage will still see 120MBps, but there will be an additional 240MBps of data going to the host peers, traversing the vMotion network.

The write-back redundancy configuration is a per-VM setting, so there is not necessarily a need to change them all to one setting. Your VMs will most likely not have the same write workload either. But as the example shows, it is not hard to saturate a 1GbE interface. Assuming roughly 125MBps for a single 1GbE interface, saturation would occur in the described arrangement with each VM configured for write-back with redundancy of one peer (WB+1). This leaves little headroom for other traffic that might be traversing that network, such as vMotions or heartbeats.
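The scenario’s arithmetic as a short Python sketch. It reproduces the post’s totals and compares the aggregate peer traffic with a single 1GbE link (~125MBps), as the paragraph above does; how that traffic actually spreads across each host’s uplinks depends on peer placement, which this sketch does not model.

```python
HOSTS = 4
VMS_PER_HOST = 6
WRITE_MBPS_PER_VM = 5.0
ONE_GBE_MBPS = 125.0  # raw 1Gb/s line rate in megabytes/s


def scenario(peers: int) -> tuple[float, float]:
    """Return (backing storage MBps, vMotion network MBps) for WB+peers."""
    total_writes = HOSTS * VMS_PER_HOST * WRITE_MBPS_PER_VM  # 120 MBps
    # Backing storage sees the same writes regardless of redundancy (destaged later);
    # each write is additionally copied to every peer over the vMotion network.
    return total_writes, total_writes * peers


if __name__ == "__main__":
    for peers in (0, 1, 2):
        storage, network = scenario(peers)
        print(f"WB+{peers}: storage {storage:.0f} MBps, "
              f"vMotion network {network:.0f} MBps "
              f"({network / ONE_GBE_MBPS:.0%} of one 1GbE link)")
```

WB+1 lands at 96% of a single 1GbE link and WB+2 at 192%, before any vMotion traffic is added.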

Fortunately, FVP has the smarts built in to ensure that vMotion activities and write-back caching get along. However, there is no denying the physics associated with the matter. If you have a lot of writes, and you really want to leverage the full beauty of FVP, you are best served by fast interconnects between hosts. It is a small price to pay for supreme performance. FVP might expose the fact that 1GbE may not be ideal in an accelerated environment, but consider what else has changed over the years. Standard memory sizes of deployed VMs have increased significantly (the vOpenData Public Dashboard confirms this). That 1GbE vMotion network might have been good for VMs with 512MB of RAM, but what about those with 4, 8, or 12GB of RAM? That 1GbE vMotion network has become outdated even for what it was originally designed for.
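To put numbers on the memory-size point, here is a rough lower bound for copying a VM’s RAM once over the link. It is only a sketch: real vMotion copies memory iteratively, re-sends dirtied pages, and has protocol overhead, none of which is modeled here.

```python
ONE_GBE_MBPS = 125.0
TEN_GBE_MBPS = 1250.0


def min_copy_seconds(ram_gb: float, link_MBps: float) -> float:
    """Time to push one full pass of RAM over a link (1 GB = 1024 MB)."""
    return ram_gb * 1024 / link_MBps


if __name__ == "__main__":
    for ram_gb in (0.5, 4, 8, 12):
        print(f"{ram_gb:>4} GB RAM: "
              f"{min_copy_seconds(ram_gb, ONE_GBE_MBPS):6.1f} s on 1GbE, "
              f"{min_copy_seconds(ram_gb, TEN_GBE_MBPS):5.1f} s on 10GbE")
```

A 512MB VM needs about 4 seconds of saturated 1GbE per pass; a 12GB VM needs at least ~98 seconds, versus under 10 seconds on 10GbE.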

Destaging

One characteristic unique to any type of write-back caching is that the data eventually needs to be destaged to the backing physical datastore. The server-side flash, now decoupled from the backing storage, has the potential to accommodate a lot of write I/Os with minimal latency. If that high write I/O lasts long enough, you may or may not have enough backing spindles, or a large enough conduit, to send it on to the backing physical storage. Destaging issues can occur with an arrangement like FVP, or with storage arrays and DAS arrangements that front I/O with flash and then push it to slower spindles.

The impact of this depends on the workload and the environment it runs in.

- If the duty cycle of the write workload that is above the physical storage I/O limit allows for enough “rest time” (defined as any moment that the max I/O to the backing physical storage is below 100%) to destage before the next over commitment begins, then you have effectively increased your ability to deliver more write I/Os with less latency.

- If the duty cycle of the write workload that is above the physical storage I/O limit is sustained for too long, the destager of that given VM will fill to capacity, and the VM will not be able to accelerate writes any faster than its ability to destage.

Huh? Okay, a picture might be a better way to describe this. The callouts below point to the two scenarios described.

So when looking at this write I/O duty cycle, two concepts emerge: the amplitude of the maximum write I/O, and the frequency of the periods in which it is overcommitting. When evaluating an environment, you might see this crude sine wave show up. This write I/O duty cycle, coupled with your physical components, is the key to how much FVP can accelerate your environment.
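One way to reason about amplitude and frequency is to idealize the crude sine wave as a square wave: bursts at a peak write rate, followed by rest periods at a lower base rate. The sketch below is an illustrative model with hypothetical parameter names, not how FVP’s destager is actually implemented. It checks the two conditions from the list above: the backlog built up during a burst must fit in the destager, and the rest period must be long enough to drain it before the next burst.

```python
def burst_is_sustainable(peak_MBps: float, burst_s: float,
                         base_MBps: float, rest_s: float,
                         destage_MBps: float, destager_MB: float) -> bool:
    """Square-wave model of a write duty cycle against a destager.

    peak_MBps/burst_s: amplitude and length of the write burst.
    base_MBps/rest_s:  write rate and length of the "rest time" between bursts.
    destage_MBps:      rate the backing storage can absorb.
    destager_MB:       capacity available to hold not-yet-destaged writes.
    """
    # Writes that pile up because the burst outruns the backing storage
    backlog_MB = max(0.0, peak_MBps - destage_MBps) * burst_s
    # Spare backing-storage capacity available to drain during the rest period
    drain_MB = max(0.0, destage_MBps - base_MBps) * rest_s
    return backlog_MB <= destager_MB and backlog_MB <= drain_MB


if __name__ == "__main__":
    print(burst_is_sustainable(500, 2, 0, 8, 100, 1000))   # True
    print(burst_is_sustainable(500, 3, 0, 8, 100, 1000))   # False: 1200 MB backlog > 1000 MB
    print(burst_is_sustainable(500, 2, 50, 8, 100, 1000))  # False: only 400 MB drained per rest
```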

What happens when the writes to the destager surpass the ability of your backing storage to keep up? Once the destager for that given VM fills up, its acceleration will reduce to the rate at which it can evacuate the data to the backing storage. One may never see this in production, but it is possible. It really depends on the factors listed at the beginning of the post. The only way to clearly see this is with a synthetic workload. In the one shown below, it was able to push 5 times the write I/Os (blue line) before eventually filling up the destager to the point where it was throttled back to the rate of the datastore (purple line).

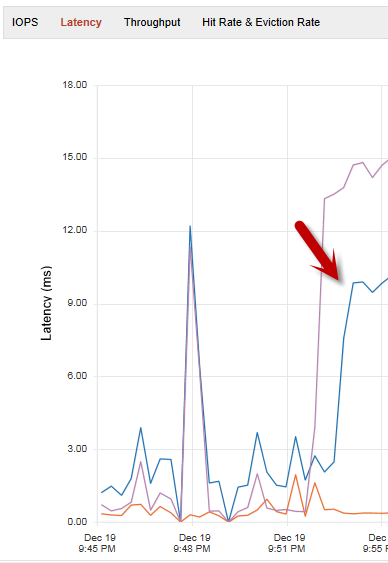

This will have an impact on the effective latency, shown below (blue line). While the destager is full, it will not be able to fulfill writes at the low latency typically associated with flash, and latency will be closer to that of the backing datastore (purple line).
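The fill-then-throttle behavior in the synthetic test can be reproduced with a toy simulation. It assumes a closed-loop workload (demand that cannot be absorbed in an interval is simply not acknowledged in that interval, rather than queued), a fixed destage rate, and a fixed destager capacity; the function name and all numbers are hypothetical.

```python
def simulate_destager(incoming_MBps: list[float], destage_MBps: float,
                      capacity_MB: float,
                      step_s: float = 1.0) -> list[tuple[float, float]]:
    """Return (acknowledged MBps, destager fill MB) for each time step."""
    fill_MB = 0.0
    history = []
    for rate in incoming_MBps:
        # Room available this step: free space plus what gets destaged meanwhile
        room_MB = capacity_MB - fill_MB + destage_MBps * step_s
        accepted_MB = min(rate * step_s, room_MB)
        fill_MB = max(0.0, fill_MB + accepted_MB - destage_MBps * step_s)
        history.append((accepted_MB / step_s, fill_MB))
    return history


if __name__ == "__main__":
    # Sustained 500 MBps against storage that destages 100 MBps, 1000 MB destager
    for second, (acked, fill) in enumerate(simulate_destager([500.0] * 6, 100.0, 1000.0)):
        print(f"t={second}s acked={acked:.0f} MBps fill={fill:.0f} MB")
```

Acknowledged throughput starts at the full 500MBps, then falls to the 100MBps destage rate once the destager is full, the same shape as the blue line dropping to the purple line. Feeding it a burst followed by zeros shows the destager emptying again during the rest time.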

Many workloads would never see this behavior, but those that are very write-intensive (like mine) and that have a big delta between their acceleration tier and their backing storage may run into this.

The good news is that workloads have a tendency to be bursty, which is a perfect match for an acceleration tier. In a clustered arrangement, this is much harder to predict, and bursty can turn into steady state quite quickly. What this demonstrates is that if there is enough of a performance delta between your acceleration tier and your storage tier, under sustained writes there may be times when it doesn’t have the opportunity to flush enough writes to maintain its ability to accelerate.

Recommendations

My recommendations (and let me clarify that these are my opinions only) on implementing FVP would include:

- Initially, run the VMs in write-through mode so that you can leverage the FVP analytics to better understand your workload (duty cycles, read/write ratios, maximum write throughput for a VM, IOPS, latency, etc.)

- As you gain a better understanding of the behavior of these workloads, introduce write-back caching and see how it changes those systems.

- Keep an eye on your vMotion network (in particular, in 1GbE environments with limited physical ports) and see if it ever comes close to saturation. Another leading indicator will be increased latency on accelerated writes.

- Run out and buy some 10GbE NICs for your vMotion network. If you have an all-1GbE legacy fabric for both your SAN and your vMotion network, and perhaps form-factor limits that make upgrading difficult (think blades here), consider investing in 10GbE for your vMotion network as opposed to your backing storage. Your read caching has probably already relieved quite a bit of I/O pressure on your storage, and addressing your cross-server bandwidth is ultimately a more affordable and simpler task.

- If possible, allocate more than one link and configure Multi-NIC vMotion. At this time, FVP will not be able to leverage this, but it will allow vMotion to use another link if one link is busy. Another possible option would be to bond multiple 1GbE links for vMotion. This may or may not be suitable for your environment.
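For the “keep an eye on your vMotion network” step, a simple check over throughput samples can flag moments of near-saturation. This is a sketch: the function name, the 80% threshold, and the sample values are assumptions, and how you collect the samples (for example, exported from your hosts’ network performance charts) is up to you.

```python
def near_saturation(samples_MBps: list[float], link_MBps: float = 125.0,
                    threshold: float = 0.8) -> list[int]:
    """Indices of samples at or above `threshold` of the link's raw capacity.

    link_MBps defaults to a single 1GbE link (1000 Mb/s / 8).
    """
    limit = threshold * link_MBps
    return [i for i, s in enumerate(samples_MBps) if s >= limit]


if __name__ == "__main__":
    # Hypothetical per-interval averages from one host's vMotion uplink
    samples = [12.0, 14.0, 11.0, 125.0, 118.0, 13.0]
    print(near_saturation(samples))  # [3, 4]
```

If flagged intervals show up outside of vMotion activity, that is a hint the write-back peer traffic alone is crowding the link.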

So if you haven’t done so already, plan to incorporate 10GbE for cross-server communication on your vMotion network. Not only will your vMotioning VMs thank you, so will the performance of FVP.

– Pete

Helpful links:

Fault Tolerant Write acceleration

http://frankdenneman.nl/2013/11/05/fault-tolerant-write-acceleration/

Destaging Writes from Acceleration Tier to Primary Storage

http://voiceforvirtual.com/2013/08/14/destaging-writes-i/

Pete, thanks for sharing your insights on FVP. I’ve learned a lot from your blog posts.

I have a question I hope you’re willing to answer.

I believe I have a similar environment to you where our shop does a lot of build/test in nightly builds (~12 hours currently before FVP). In designing upgrades to our system, I’m considering adding several low-end SAN devices (Synology Rackstation RS214) with a single Intel S3700 SSD. Then, on our build/test VMs, we create a ‘scratch’ disk on the VM that is non-persistent and uses the “cheap-SAN” devices for storage. The ‘scratch’ disk would be the location where checkout/compile occurs. The actual artifacts of the build are then copied to a location with redundancy using Jenkins.

For the build/test VMs, I plan to have FVP write-back redundancy set to zero. If something fails, we rebuild/retest. I’m thinking this would avoid bottlenecks on the LAN and SAN for our build/test needs. Does this seem like a reasonable plan?

Hello Dale. First, thanks for reading. Those are some really great questions you ask, so let me do my best at answering them in the detail that they deserve.

There are a few factors that will affect the answer, such as the type of compiling that is being performed, the platform, the code itself, and then of course your existing infrastructure (# of hosts, fabric, etc.). If you do not want to provide these in a public manner, DM me, and I’ll craft my message to be more specific.

It’s hard to tell what you mean by “several” low-end SAN devices, as I do not know the topology of your arrangement, but I do have quite a bit of experience with the Synology units, both in production at work and in my home lab. They are very nice, affordable units that can suit certain purposes very well. I really like them. What they currently struggle with is a poor implementation of an iSCSI stack, and not very good multipathing. They do very well using NFS, but at this time FVP does not accelerate backing NFS datastores. Even though the “scratch” data for your environment can be considered expendable, I suspect the VMs are very important to you. So I would plan for a storage array that meets the fault-tolerance requirements you’d normally see in a storage solution (RAID10 or RAID6, redundant NICs, redundant power, etc.). I would hate for you to get into a situation in which a change in scope occurs, and all of a sudden you have a “bet the business” critical system running on a scattering of poorly planned, non-redundant storage.

In the scenario that you describe, one could in theory have a Synology unit with just 2 bays in it. However, if you put an SSD in one, that means a couple of things. You have data “at rest” on non-redundant drives. You’d also have a situation in which the drive would have far more bandwidth than the uplink to the unit, thereby partially defeating the purpose of the drive.

The challenge, from my perspective, when it comes to builds is the strategic approach to handling the source code. Depending on what you choose, it can alter how efficient your compiling is. You can have fast storage, but workflows that, say, check out the code a lot will expose the inefficiencies of traditional file transport mechanisms (SVN checkouts, file copies via remote file share/mount to local).

Having the build/test VMs set to WB+0 is okay. In fact, it is what I used to do, as I took the same position: if there was some host failure, one would just run the compile again. However, one has to have a rock-solid recovery plan, because in the event of a host failure, the VM generating the build might be left in a stalled state and may not come up. Your scratch data may be considered expendable, but the system that generates it may not. Since the caching policy is set per VM, and not per VMDK inside of a VM, this is something that you will want to keep in mind. Ultimately I decided to pull back from WB+0 in this specific case because my RTOs were tight enough that it was better for me to run WB+1.

So in summary, my approach would be to stick with FVP and a good SSD in each host (acting as the storage accelerator for data I/O in motion), then look at what your options and budget might be for a storage array that would serve all of your hosts via a shared storage fabric for the data at rest. This is not the only way to do it, but it is the one I’d probably recommend for your situation. Feel free to reach out to me if you’d like to discuss further.

Hi Pete,

Thanks for your reply. I’ll do my best to respond to your points. Sorry for my slow reply.

> There are a few factors that will affect the answer, such as the type of compiling that is being performed, the platform, the code itself, and then of course your existing infrastructure (# of hosts, fabric, etc.)

We have a fairly simple setup.

— Current configuration —

VMware Essentials

(3) ESXi Servers 2x 6-Core CPUs, ~200GB RAM, 2-4 NICs bonded

(2) ZFS file servers with mostly RAID-10 arrays. 2-4 bonded NICs

1Gb ports on network switch

— Near future upgrade —

VMware vSphere with Operations Manager

(6) ESXi Servers 2x 10-Core CPUs, 256GB RAM, 6x 1Gb NICs, 1x 400GB Intel S3700

PernixData FVP

(2) ZFS file servers with mostly RAID-10 arrays. 2-4 bonded NICs (no change)

1Gb ports on network (new network, but still 1Gb, sigh…)

Planned network setup like p.176 of “VMware vSphere Design” for 6x NICs. All 1Gb connections (ironic given the topic of this post, I know)

Nearly all our VMs are linked clones. We first create a VM using our “gold” datastore (on Intel 510 SSDs in RAID-5). Then, we create a linked clone with the OS on the “gold” datastore but all the deltas/snapshots put on spinning-disk “silver” (10k SAS) or “bronze” (7200 SATA) datastores that are typically 8-10 disks in RAID-10.

— Longer term upgrade —

10Gb NICs/switches between ESXi hosts and ZFS datastores.

Some suitably fast storage (FVP will help determine requirements)

> I would hate for you to get into a situation in which a change in scope occurs, and all of a sudden you have “bet the business” critical system running on a scattering of poorly planned, non-redundant storage.

Good point.

> The challenge to me (from my perspective) when it comes to builds is the strategic approach on how to handle the source code. Depending on what you choose, it can alter how efficient your compiling is. You can have fast storage, but workflows that say, checkout the code a lot will expose the inefficiencies of traditional file transport mechanisms (SVN checkouts, file copies, via remote file share/mount to local).

Yes, more work to determine. :-). We currently do full SVN checkouts each night (not fast now) since the Jenkins workspace is deleted prior to build. Copying artifacts also occurs and likely has the performance issues you mention. Not performant, but “safe”.

My idea is to create a separate “scratch” virtual disk on each build/test VM. This will be where our Jenkins workspaces are located. The “scratch” disk would be non-persistent (since we don’t want/need the contents of this disk after the VM is reverted). Since the data on the “scratch” disk is non-persistent, short-lived, and easily recreated, I’m willing to be less “safe” with the datastore where it resides.

> Your scratch data may be considered expendable, but the system that generates it may not.

Yes. The OS/tools reside on a virtual disk that uses a datastore on our ZFS fileserver with some level of RAID. If/when things go awry, we revert to snapshot.

> Since the caching policy is set per VM, and not per VMDK inside of a VM, this is something that you will want to keep in mind.

I thought that caching policy could also be set per datastore (really need to get FVP installed…). If so, my plan is WB+0 for the datastore used for “scratch” disks.

Here are the datastore options I’m considering for the “scratch” disk:

1) 3x Synology RackStation with 1x 400GB Intel S3700 (about $1500 each)

2) 5x Intel S3700 in RAID-5 on our ZFS file server (and upgrade NIC to 10Gb)

3) Install Intel S3700 on ESXi host and use as local storage.

#1 helps prevent networking from being a bottleneck to the storage, but I don’t know what iSCSI performance to expect from this option. This was my “main” idea. But your comments

> What they currently struggle with is a poor implementation of an iSCSI stack, and not very good multipathing.

and

> You’d also have a situation in which the drive would have far more bandwidth that than the uplink to the unit, thereby partially defeating the purpose of the drive

make this option seem a bit suspect.

#2 is “safest”, but likely has a bottleneck in the network between the ESXi hosts and ZFS file servers. This may be the way to go, as it would dovetail best with a future 10Gb network upgrade.

#3 offers the best performance and is the simplest, but VMs can no longer be vMotioned. As long-time “VMware Essentials” (without vMotion) customers, lack of vMotion will not be something we miss. But I’d rather not limit the flexibility of the VMs if possible.

> So in summary, my approach would be to stick with going with FVP and a good SSD in each host

Check. My understanding is the S3700 is what the PernixData guys use.

> storage array that would serve all of your hosts via a shared storage fabric for the data at rest

Yes, this is the real solution. I’ve (finally) realized that messing around with other options is just goofy.

I’ll recommend that we upgrade the network between ESXi hosts and file servers and add higher performing storage as we better understand bottlenecks by using FVP.

Thanks again for sharing your experience. I’m looking forward to working with FVP.

Dale

Hey Dale,

I’d like to butt in a little here and talk about Synology devices and host-based caching. I primarily use Autocache by Proximal Data (disclosure: Proximal Data is a client of mine), but I have used Pernix’s FVP, SanDisk’s FlashSoft, VMware’s vFlash and a few others. (Basic rundown here: http://www.theregister.co.uk/2013/10/18/flash_cache_2013/). As such I’d like to offer a few tips and pointers. Take with appropriate NaCl.

The first thing I’d like to say is that combining host-based write caching with low- or mid-range NASes or SANs isn’t the best of ideas. The short version is that I’ve had corruption (typically when there’s a power failure, someone pulls the wrong network cable, etc.).

I’ve had issues with backups, too. There exists data in the write cache on the host that isn’t on the NAS/SAN. If you are relying on the innate capabilities of the NAS/SAN to take a snapshot for backup purposes (as I do with my Synology units,) then you are pretty boned. You can get around it with some scripting of the host-based write caches (which trigger flushing of data before a backup), but not everything is easily scriptable (such as those Synology units,) and flushing the cache can take /forever/, especially under load.
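Why flushing “can take forever, especially under load” comes down to simple arithmetic: the cache only shrinks at the rate the backing storage can absorb minus the rate at which new writes keep arriving. A minimal sketch with a hypothetical helper name and made-up figures:

```python
import math


def flush_seconds(dirty_MB: float, destage_MBps: float, incoming_MBps: float) -> float:
    """Estimated time to empty a write cache before a backup snapshot.

    The backlog drains at (destage rate - new write rate). If new writes arrive
    as fast as the backing storage can take them, the cache never empties.
    """
    net_drain = destage_MBps - incoming_MBps
    if net_drain <= 0:
        return math.inf
    return dirty_MB / net_drain


if __name__ == "__main__":
    print(flush_seconds(6000, 100, 0))    # idle: 60.0 s
    print(flush_seconds(6000, 100, 80))   # under load: 300.0 s
    print(flush_seconds(6000, 100, 120))  # inf: the flush never completes
```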

I’ve also run into corruption where I’m relying on the RAID+RAIN setup that Synology provides to achieve my HA. Data in host cache won’t always flush to the new unit (a bug I expect will be fixed in due time, but which has cost me a few templates.)

The host-based write caching solutions available today are designed for enterprises. They are another tier of storage and they /must/ be treated as such. This requires a complete rearchitecting of your storage solution from transport to backups. Don’t even bother trying write caching unless you’re working with enterprise devices, enterprise budgets and enterprise redundancy, across everything from power to networking.

Write caches aren’t exactly forgiving.

That said, everyone has a read cache, so you’re spoilt for choice here. VMware, Sandisk, Pernix, Proximal…and they all work! I prefer Proximal’s over the rest, (big surprise, eh?) though I do like to think that isn’t because they’re paying me.

I use NFS a lot, so support for both NFS and iSCSI is critical. My lab results have also shown that for my production workloads Proximal has consistently accelerated more IOPS than the others. Given that I seem constantly starved for IOPS, that starts to matter.

That IOPS advantage could be due to one of two things, and the truth of the matter is that I don’t know enough about the gubbins inside each of the products to determine which. Either Proximal wrecks the competition at read caching for my workloads because their algorithms are better, or they win because they have this “global cache” thing that basically means I don’t have to pick a set amount of flash and assign it per VM.

To be honest, it’s probably a combination of both reasons. The simple truth on this is that despite having been immersed in the flash caching scene for the past year, I /still/ don’t know enough to tell you what is the “optimal” amount of flash for my workloads.

Based on Autocache’s constant resizing of the cache, the “optimal amount” seems to vary not only per workload, but per time of day. (Assuming that Autocache’s constant resizing is in fact the application finding the optimal amount.) This is why I’m especially not fond of VMware’s current implementation. (Well, that and it adds the dimension of having to worry about “do I have enough flash on the destination server?”, which doesn’t appeal to the lazy sysadmin side of me.)

All of the contenders work just fine with Synology when I am using them as read caches…but that isn’t to say things are all roses and happiness. You need to understand that read cache is like a drug. It’s dangerously addictive…and cold turkey can kill you.

In one of my production environments I have a pair of Synology units in RAID 5 + RAIN 1 serving 8 hosts. 6 of these hosts are outfitted with an Intel 520 480GB SSD. Everything works just fine, no issues…until I stick my great big nose in there and do something stupid.

I was trying to update Autocache one day and succeeded in breaking the Autocache manager VM. I killed Autocache across all 6 hosts that had it installed and *bam*, just like that, all the I/O that was being cached was suddenly hitting the Synology cluster.

Long story short, Autocache was taking so much load off the Synology that when all nodes were switched off like that the Synology cluster folded like a cheap tent. I had completely oversubscribed the I/O of the cluster and as soon as the cache was off I was hosed.

All of the above is a really long-winded way of saying a few simple things.

1) Don’t use host-based write caching with low-end or midrange SANs.

2) You can use read caching with just about anything.

3) You’ll probably be shocked by how good “plain vanilla” read caching is.

4) Be aware that write caching brings new risks and concerns that you must architect around.

5) Make damned sure your storage can survive either write or read caching getting switched off without simply collapsing under the strain.
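Point 5 can be checked on paper. With a read cache in place the array only sees cache misses plus writes; switch the cache off and it sees everything. A sketch, assuming read-only (write-through) caching, with hypothetical helper names and IOPS figures:

```python
def backing_iops(read_iops: float, write_iops: float, read_hit_ratio: float) -> float:
    """IOPS reaching the array when a read cache serves `read_hit_ratio` of reads.

    Writes pass straight through (read-only / write-through caching assumed).
    """
    return read_iops * (1.0 - read_hit_ratio) + write_iops


def survives_cache_off(read_iops: float, write_iops: float, array_max_iops: float) -> bool:
    """With caching switched off, the array must absorb every read and write."""
    return backing_iops(read_iops, write_iops, 0.0) <= array_max_iops


if __name__ == "__main__":
    reads, writes, array_max = 8000, 1000, 3000  # hypothetical workload and array limit
    print(f"cache on:  {backing_iops(reads, writes, 0.9):.0f} IOPS at the array")
    print(f"cache off: {backing_iops(reads, writes, 0.0):.0f} IOPS at the array")
    print("survives cache loss:", survives_cache_off(reads, writes, array_max))
```

In this made-up case the array sits comfortably under its limit with the cache on (about 1800 IOPS) and three times over it with the cache off (9000 IOPS), which is the “folded like a cheap tent” situation described above.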

For your specific setup, if you’re trialing FVP, or have already purchased it, then talk to PernixData about using it strictly as a read cache. If you’ve not purchased yet, look at all the read caches from all the vendors.

Good luck, and don’t be discouraged by people pooh-poohing commodity storage. I run dozens of commodity storage setups from the homebuilt ZFS stuff to Starwind SAN to Nexenta through to Synology and Western Digital’s Sentinel line. I’ve also got Dells, HP and so forth floating about.

Host-based caching is a great solution to help deal with IOPS constraints in these environments, no matter whose solution you use. Find the one that’s right for you and I promise, you’ll never look back. 🙂

Cheers!

PernixData is going to support NFS in the next version, and is also adding other new features, such as network compression to reduce traffic on your vMotion network. Read up on them; I think it is a great solution.

You’ve scared the junk out of me about doing a PernixData write-caching demo. We have 3 PowerEdge hosts in our production cluster with FusionIO drives and NetApp storage behind them. Sounds enterprise to me 🙂 The only parts that freak me out are a power outage (our building has battery backup long enough for the generator to kick in) and backups. We use snapshots, so we’ll have to stop the services, flush the cache and then take a snapshot, but it sounds like you’re thinking that might take a long time? Has your opinion changed since the latest versions have come out?

Thanks for reading. There are a lot of variables that factor into how quickly the data is flushed or destaged. They are not concerns so much as operational factors that should be considered. But with nice FIO drives for caching, and what I assume is a decent NetApp backing the cluster, it should destage pretty quickly. Most importantly, it will absorb what would otherwise be the latency spikes that occur in production. As far as backups go, you won’t have to stop any services. For instance, with Veeam, which also uses VMware’s snapshotting mechanism, you simply set a special setting in FVP that will instruct the VMs being backed up to flush their write cache to the backing storage. …Not a big deal, and it works quite well. All of that is scriptable via PowerShell too. But if you are hesitant, remember that you can establish caching policy changes on a per-VM basis. I’ve been operational since the pre-GA beta days, and all is going quite well. I’m absolutely thrilled with the features coming with the next major release, as they address some of my specific use cases.

Thanks for the heads up. We have one of their sales engineers who’s very experienced with FIO and NetApp helping us set up the demo. He said he’d be setting up the PowerShell script with us, so it sounds like he’s on the same wavelength. I feel better now 🙂

I know several of their SEs, and they are all top notch. You are in good hands. Good luck.