Hyper-converged infrastructure (HCI) can bring several design and operational benefits to the table, adding to the long list of reasons behind its popularity. Yet HCI also introduces new considerations in understanding and measuring the technical costs associated with the architecture. These technical costs can be thought of as a usage “tax” or “overhead” on host resources. The size of this cost can vary drastically, and depends heavily on the architecture used. For an administrator, it can be challenging to measure and understand. The architecture used by an HCI solution should not be overlooked, as these technical costs not only influence the performance and consistency of the VMs, but can dramatically impact the density of VMs per host, and ultimately the total cost of ownership.

With HCI, host resources (CPU, memory, and network) are now responsible for an entirely new set of duties typically provided by the storage array in a traditional three-tier architecture. These responsibilities include handling VM storage I/O from end to end. In addition, due to the distributed nature of HCI, hosts take part in the storage activity of VMs not local to the host, such as replicated writes of a VM, as well as data-at-rest operations and other services related to storage. These responsibilities consume host resources. The question is, how much?

This multi-part series looks at the basics of HCI architectures, and how they behave differently with respect to their demands on CPU, memory, and network resources. Operational comparisons are not covered, simply to keep the series focused on that intent.

"Storage efficiency" is more than what you think

The term "storage efficiency" is commonly associated with just data deduplication and compression. With hyper-converged infrastructures, this term takes on additional meaning. Storage efficiency in HCI relates to how efficiently I/Os are delivered to and from the VM. This efficiency matters not only for the performance and consistency seen by the VM, but also for how much resource usage is introduced on the hosts in the cluster. The latter is rarely considered, yet extremely important.

HCI Architectures

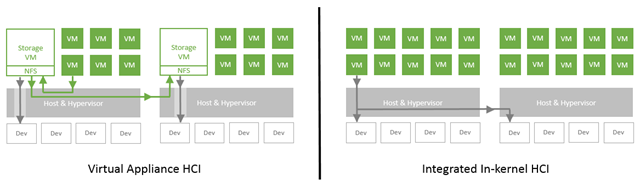

HCI solutions available in today’s market not only offer different data services, but are built differently, which is just one of the many reasons why it is difficult to generalize a typical amount of overhead needed to process storage I/O. All HCI solutions vary (some more than others) in how they provide storage services to the VMs while maintaining resources for guest VM activity. The two basic categories, as illustrated in Figure 1, are:

- Virtual appliance approach. A VM lives on each host in the cluster, delivering a distributed shared storage plane, processing I/O and the other related activities. Depending on the particular HCI solution, this virtual appliance on each host may also be responsible for a number of other duties.

- Integrated/in-kernel approach. The distributed shared storage system is a part of the hypervisor, where key aspects of the storage system are part of the kernel. This allows for virtual machine I/O to traverse through the native kernel I/O path for the hosts participating in that I/O activity.

Figure 1. Comparing an I/O write between HCI architectures (simplified for clarity)

In HCI solutions that use a VM to process storage I/O on each host, that VM resides in a context (user space) that is no different than the application VMs running on the host. In other words, the virtual appliance performing system-level storage duties contends for the same resources as the VMs that it is trying to serve. HCI solutions built into the hypervisor maintain end-to-end control and awareness of the I/O. Since an in-kernel, integrated solution allows I/O to traverse the native kernel I/O path, it uses host resources in the least "costly" way. HCI solutions built into the kernel minimize the amplification of I/Os, and the CPU and memory resources it takes to process those I/Os from end to end. Sometimes virtual appliance based HCI solutions will use devices on hosts configured in the hypervisor for direct pass-through (aka “VMDirectPath”) in an attempt to reduce overhead, but many of the fundamental penalties of I/O amplification through this indirect path and of context switching remain, especially as they relate to CPU cycles.

Addressing a problem in different ways

Why are there multiple approaches? Manufacturers may state many reasons why they chose a specific approach, and why their approach is superior. Most of the decision comes down to technical limitations and go-to-market pressures. An HCI vendor may not have the access, or the ability, to provide this functionality natively in the kernel of a hypervisor. A virtual appliance approach is easier to bring to market, and naturally adaptable to different hypervisors, since it is little more than a virtual machine that processes storage I/O.

By way of comparison, those who have full ownership of the hypervisor can integrate this functionality directly into the hypervisor, and when appropriate, build some aspects of it right into the kernel, just as other core functionality is built into the kernel. Resource efficiency, hypervisor feature integration, as well as the contextual awareness and control of I/O types are typically the top reasons why it is beneficial to have a distributed storage mechanism built into the hypervisor.

Do both approaches work? Yes. Do both approaches produce the same result in VM behavior and host resource usage? No. Running the same workloads using HCI solutions with these two different architectures may produce very different results on the VMs, and the hosts that serve them. The degree of impact will depend on the technical cost (in resource usage) of the I/O processing, and other data services provided by a given solution.
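To make the relationship between overhead and density concrete, here is a minimal planning sketch. Every number in it is made up for illustration, and the `Host` class, the `vms_per_host` function, and its parameters are hypothetical names, not part of any HCI product. It simply subtracts the resources consumed by the storage layer from the host and checks how many VMs of a given size still fit.

```python
import math
from dataclasses import dataclass


@dataclass
class Host:
    cores: int        # physical CPU cores on the host
    memory_gib: int   # physical memory on the host, in GiB


def vms_per_host(host, storage_cores, storage_memory_gib,
                 vm_cores=0.5, vm_memory_gib=8):
    """Estimate how many VMs fit once the storage layer takes its share.

    All inputs are hypothetical planning numbers, not measurements.
    """
    free_cores = host.cores - storage_cores
    free_memory = host.memory_gib - storage_memory_gib
    by_cpu = math.floor(free_cores / vm_cores)
    by_memory = math.floor(free_memory / vm_memory_gib)
    # Whichever resource runs out first sets the limit.
    return max(0, min(by_cpu, by_memory))


host = Host(cores=32, memory_gib=512)

# Two imaginary solutions with different storage overhead on the same host.
solution_a = vms_per_host(host, storage_cores=2, storage_memory_gib=16)
solution_b = vms_per_host(host, storage_cores=8, storage_memory_gib=64)
print(solution_a, solution_b)
```

A static model like this only captures reserved resources. It says nothing about I/O amplification or contention under load, which is why measurements from real workloads matter more than a calculation on paper.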

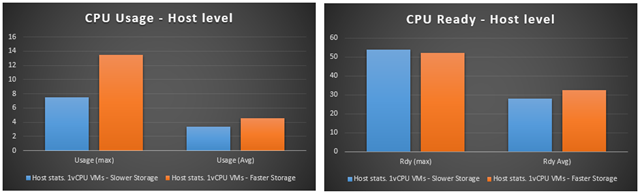

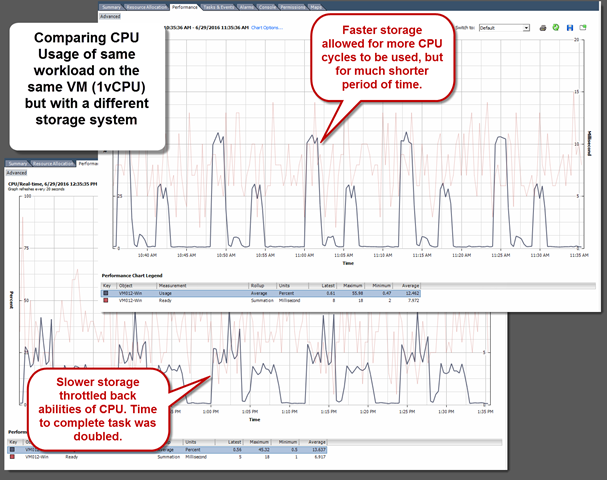

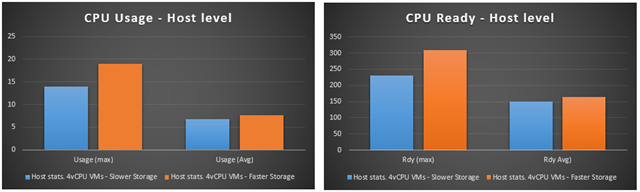

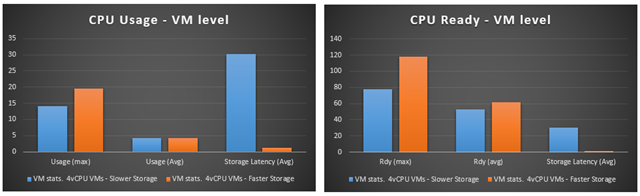

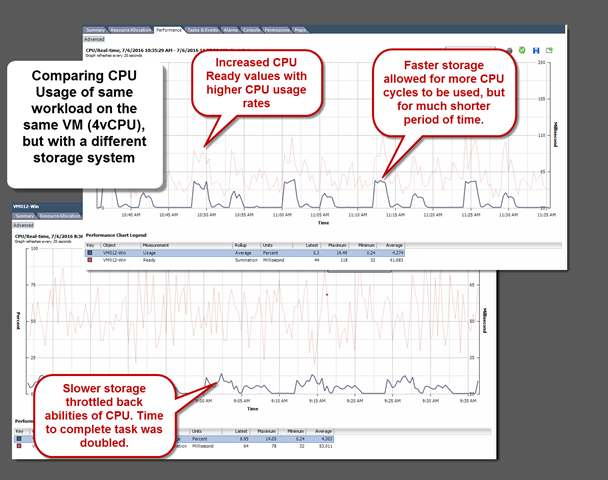

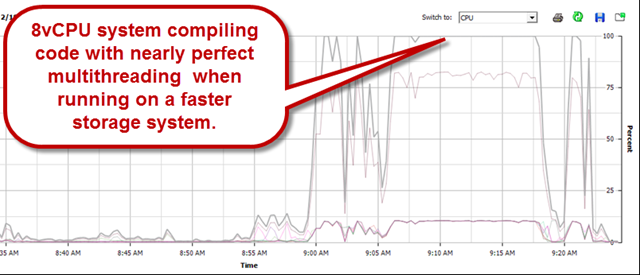

This difference often does not show up until numerous real workloads are put on these solutions. Just as with a traditional storage array, every solution is fast when there is little to no load on it. What counts is the behavior under real load with contention for resources. This is not always visible with synthetic testing. For HCI environments, the overall “storage efficiency” of a particular HCI solution can be better compared (assuming identical hardware and workloads) by looking at the following in a real HCI environment running production workloads:

- The average number of active VMs per host when running your real workloads.

- The performance characteristics of the VMs and hosts when running your real workloads while hosts are busy serving other workloads.
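The two measurements above could be summarized with something like the following sketch. The sample data is invented for illustration, and `summarize` is a hypothetical helper; in practice the observations would come from whatever monitoring tooling the environment already uses. It assumes each observation pairs the number of active VMs on a host with a VM-observed latency taken at the same time.

```python
from statistics import mean, quantiles


def summarize(samples):
    """Summarize (active_vms_on_host, vm_latency_ms) observations.

    Needs at least two observations to compute a percentile.
    """
    active_vms = [s[0] for s in samples]
    latencies = [s[1] for s in samples]
    return {
        "avg_active_vms_per_host": mean(active_vms),
        # 95th percentile: the last of 19 cut points when n=20.
        "p95_latency_ms": quantiles(latencies, n=20, method="inclusive")[18],
    }


# Hypothetical observations from one cluster under production load.
cluster_samples = [(40, 1.0), (42, 2.0), (38, 3.0), (41, 4.0), (39, 5.0)]
summary = summarize(cluster_samples)
print(summary)
```

Running the same summary against two clusters with identical hardware and workloads gives a like-for-like comparison of density and consistency, rather than a comparison of peak numbers from an idle system.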

These measurements take this topic out of an occasionally tiresome academic debate, and demonstrate the differences in real-world circumstances. Ironically, faster hardware can increase, not reduce, the differences between these architectural approaches to HCI. This is not unlike what now occurs quite often at the application level, where faster hardware exposes bottlenecks in software and application design that went unnoticed on older, slower hardware.

Now that we have covered why "storage efficiency" means so much more than data services like deduplication and compression, the next post in this series will focus on CPU resources in HCI environments, and what to look out for when observing CPU usage behaviors.

Does the concept of host resource usage interest you? If so, stay tuned for the book, vSphere 6.5 Host Resources Deep Dive by Frank Denneman and Niels Hagoort. It is sure to be a must-have for those interested in the design and optimization of virtualized environments. You can also follow updates from them at @hostdeepdive on Twitter.