In the simplest of terms, performance charts and graphs are nothing more than lines with pretty colors. They exist to provide insight and enable smart decision-making. Yet accurate interpretation is a skill often trivialized or, worse, completely overlooked. Depending on how well the data is presented, performance graphs can amaze or confuse, and the line between the two is thin.

A vSphere environment provides ample opportunity to stare at all types of performance graphs, but the techniques for interpreting the actual data are often lost. One counterpoint is that most graphs are self-explanatory. That might be a valid point if they were not so often misinterpreted and underutilized. To appeal to those averse to performance graph overload, many well-intentioned solutions offer overly simplified, dashboard-like insights. They might serve as a good starting point, but this distilled data often falls short of providing the detail necessary to understand real performance conditions. The variables that impact performance can be complex and deserve more insight than a green/yellow/red indicator over a large sampling period.

Most vSphere administrators can quickly view the “heavy hitters” of an environment by sorting VMs by CPU usage to see the big offenders, and then drill down from there. vCenter, however, does not provide a good visual representation of storage I/O. This is notable, because storage performance can be the culprit behind so many performance issues in a virtualized environment. PernixData FVP not only accelerates your storage I/O, but also fills this void nicely by helping you understand it.

FVP’s metrics leverage VMkernel statistics but, in my opinion, make them more consumable. These statistics reported by the hypervisor are particularly important because they measure what your VMs and applications actually feel. Keep this in mind when other components in your infrastructure (storage arrays, network fabrics, etc.) advertise good performance numbers that don’t align with what the applications are seeing.

Interpreting performance metrics is a big topic, so the goal of this post is to provide some tips to help you interpret PernixData FVP performance metrics more accurately.

Starting at the top

To quickly find the busiest VMs, start at the top of the FVP cluster and click on the “Performance Map”, which is similar to a heat map. Rather than representing VM I/O activity by color, this view displays each VM on its respective host, sized in proportion to how much I/O it is generating for the given time period. More active VMs show up larger than less active VMs.


From here, you can click on individual VMs in the map to get a feel for their activity. This serves as a convenient way to drill into the common I/O metrics of each VM: Latency, IOPS, and Throughput.

As shown below, the same metrics are available when the VM is highlighted in the left-hand pane of the vSphere client, which gives a larger view of each graph. I tend to like this approach because it is a little easier on the eyes.

VM based Metrics – IOPS and Throughput

When you drill down into a VM’s FVP performance statistics, the view defaults to the Latency tab. This makes sense considering how important latency is, but I find it most helpful to first click on the IOPS tab to get a feel for how many I/Os the VM is generating or requesting. The primary reason I don’t look at the Latency tab first is that latency is a metric that requires context. VM workloads are often bursty, and there may be periods with little to no IOPS. The VMkernel can sometimes report latency somewhat inaccurately when there is little or no I/O activity, so looking at the IOPS and Throughput tabs first brings context to the Latency tab.
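To illustrate why latency needs IOPS context, here is a minimal sketch that compares a naive average of latency samples with an IOPS-weighted average over intervals that actually carried I/O. The `(iops, latency_ms)` sample shape, the sample values, and the `min_iops` threshold are all hypothetical; this is not an FVP export format or API.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical samples: one (iops, latency_ms) pair per chart interval.
# This data shape is illustrative only, not an FVP export format.
Sample = Tuple[float, float]


def naive_mean_latency(samples: Iterable[Sample]) -> float:
    """Average every latency sample equally, idle intervals included."""
    latencies = [lat for _, lat in samples]
    return sum(latencies) / len(latencies)


def latency_with_context(samples: Iterable[Sample], min_iops: float = 10.0) -> Optional[float]:
    """IOPS-weighted mean latency over intervals that carried real I/O.

    Intervals below min_iops (an assumed threshold) are ignored.
    Returns None if no interval qualifies.
    """
    busy = [(iops, lat) for iops, lat in samples if iops >= min_iops]
    total_ios = sum(iops for iops, _ in busy)
    if total_ios == 0:
        return None
    return sum(iops * lat for iops, lat in busy) / total_ios


# Two near-idle intervals with high reported latency, then a busy burst.
samples = [(1, 40.0), (2, 35.0), (1500, 1.2), (1800, 0.9)]
print(naive_mean_latency(samples))
print(latency_with_context(samples))
```

The naive average is dominated by two intervals that together carried three I/Os, while the weighted figure reflects what the busy workload actually experienced.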

The default “Storage Type” breakdown is a good view to start with when looking at IOPS and Throughput. To simplify the view even further, tick the boxes so that only the “VM Observed” and “Datastore” lines show, as displayed below.

The predefined “read/write” breakdown is also helpful on the IOPS and Throughput tabs, as it gives a sense of the proportion of reads to writes. More on this in a minute.

What to look for

When viewing IOPS and Throughput in an FVP-accelerated environment, there may be times when you see a large separation between the “VM Observed” line (blue) and the “Datastore” line (magenta). As shown below, this separation, with the “VM Observed” line much higher than the “Datastore” line, is a clear indication that FVP is accelerating those I/Os and driving down latency. It doesn’t take long to learn to look for this visual cue.

But there are times when there may be little to no separation between these lines, such as what you see below.

So what is going on? Does this mean FVP is no longer accelerating? No, it is still working; the key is interpreting the graphs correctly. Since FVP is only an acceleration tier, cached reads are served from the acceleration tier on the hosts, which creates the large separation between the “Datastore” and “VM Observed” lines. When FVP accelerates writes, they are synchronously buffered to the acceleration tier and then destaged to the backing datastore as soon as possible, often within milliseconds. Because destaging completes so quickly relative to the rate at which data is sampled and rendered on the graph, the “VM Observed” and “Datastore” write statistics fall into nearly the same sample intervals, and the two lines track each other closely.
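The effect of the sampling interval can be shown with a small simulation: writes are counted once when the VM sees them complete and again when they reach the datastore after a destage delay, and both are bucketed per chart interval. The 20-second interval, the 5 ms destage delay, and the fixed write rate are assumptions for illustration, not FVP internals.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_interval_counts(times_ms: List[int], interval_ms: int) -> Dict[int, int]:
    """Count events per chart sample interval (bucket index -> count)."""
    return dict(Counter(t // interval_ms for t in times_ms))


def simulate_destage(
    write_times_ms: List[int], destage_delay_ms: int, interval_ms: int = 20_000
) -> Tuple[Dict[int, int], Dict[int, int]]:
    """Return (vm_observed, datastore) write counts per interval.

    Assumes each write is acknowledged to the VM at its issue time and
    reaches the datastore exactly destage_delay_ms later.
    """
    vm_observed = per_interval_counts(write_times_ms, interval_ms)
    datastore = per_interval_counts(
        [t + destage_delay_ms for t in write_times_ms], interval_ms
    )
    return vm_observed, datastore


# One write every 10 ms for 60 seconds (6,000 writes).
writes = list(range(0, 60_000, 10))
vm, ds = simulate_destage(writes, destage_delay_ms=5)
print(vm)
print(ds)
```

With a destage delay measured in milliseconds, both series land in the same buckets and the lines overlap; only a delay on the order of the sample interval itself would pull them apart.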

By toggling the “Breakdown” to “read/write”, we can confirm that in this case the change in appearance of the IOPS graph above came from the workload transitioning from mostly reads to mostly writes. Note how the magenta “Datastore” line above matches up with the cyan “Write” line below.

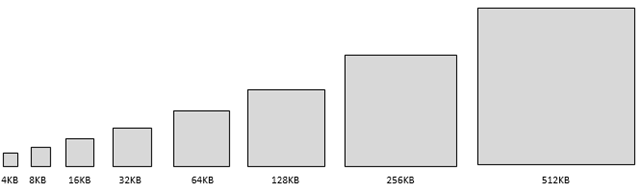

The graph above still might imply that performance went down as the workload transitioned from reads to writes. Is that really the case? Let’s take a look at the “Throughput” tab. As you can see below, the same amount of data was transferred during both phases of the workload, even though the IOPS graph shows far fewer I/Os while the writes were occurring.

The most common reason for this sort of behavior is OS file system buffer caching inside the guest VM, which assembles writes into larger I/Os. The amount of data read in this example was the same as the amount of data written, but measuring that by IOPS alone (i.e., I/O commands per second) can be misleading. The impact of I/O size on storage performance is underappreciated, and this example shows how often I/O sizes can change and how misleading IOPS can be when viewed on its own.
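The relationship at work here is simply throughput = IOPS × I/O size. A quick sketch with hypothetical numbers (100 MiB/s of data, 4 KiB reads versus 64 KiB coalesced writes) shows how the same throughput can produce very different IOPS lines:

```python
def iops_for(throughput_mib_s: float, io_size_kib: float) -> float:
    """IOPS required to move a given throughput at a fixed I/O size."""
    return throughput_mib_s * 1024 / io_size_kib


def avg_io_size_kib(throughput_mib_s: float, iops: float) -> float:
    """Average I/O size implied by a Throughput and an IOPS reading."""
    return throughput_mib_s * 1024 / iops


# Same 100 MiB/s of data in both phases (hypothetical numbers).
print(iops_for(100, 4))    # small reads
print(iops_for(100, 64))   # larger writes assembled by the guest file system cache
print(avg_io_size_kib(100, 1600))
```

Dividing the Throughput reading by the IOPS reading for the same interval is a handy way to estimate the average I/O size when the graphs seem to disagree.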

If the example above doesn’t make you question conventional wisdom on industry standard read/write ratios, or common methods for testing storage systems, it should.

We can also see from the Write Back Destaging tab that FVP destages writes as aggressively as the array will allow. As you can see below, all of the writes were delivered to the backing datastore in under 1 second. This ties back to the previous graphs, which showed the “VM Observed” and “Datastore” lines following each other very closely during the write-heavy period.

The key to understanding the performance improvement is the Latency tab. Notice in the image below how the VM’s latency dropped to a low, predictable level throughout the entire workload. Again, this is the metric that matters.

Another way to think of this is that the IOPS and Throughput performance charts can typically show the visual results for read caching better than write buffering. This is because:

- Cached reads never come from the backing datastore, whereas buffered writes always hit the backing datastore.

- Reads may be smaller I/O sizes than writes, which visually skews the impact if only looking at the IOPS tab.

Therefore, the ultimate measurement for both reads and writes is the latency metric.
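The first point above can be expressed as a simplified model of datastore IOPS: read hits never reach the datastore, read misses always do, and every buffered write is eventually destaged. This sketch assumes one datastore write per buffered write (no coalescing) and uses hypothetical numbers.

```python
def datastore_iops(read_iops: float, write_iops: float, read_hit_rate: float) -> float:
    """Datastore IOPS under a simplified read-cache plus write-back model.

    Assumes read hits never reach the datastore, read misses always do,
    and every buffered write is destaged as exactly one datastore write.
    """
    return read_iops * (1.0 - read_hit_rate) + write_iops


# Hypothetical: 2,000 VM-observed IOPS in both cases.
print(datastore_iops(read_iops=2000, write_iops=0, read_hit_rate=0.95))  # read-heavy
print(datastore_iops(read_iops=0, write_iops=2000, read_hit_rate=0.95))  # write-heavy
```

The read-heavy case shows a 20x gap between the two lines, while the write-heavy case shows none, even though both workloads may be enjoying low latency.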

VM based Metrics – Latency

Latency is arguably one of the most important metrics to look at. This is what matters most to an active VM and the applications that live on it. Now that you’ve looked at IOPS and Throughput, take a look at the Latency tab. The “Storage Type” breakdown is a good place to start, as it gives an overall sense of the effective VM latency against the backing datastore. Much like the other metrics, look for separation between the “VM Observed” and “Datastore” lines, with the “VM Observed” latency below the “Datastore” latency.

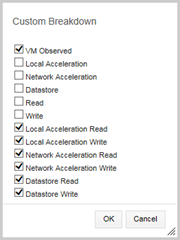

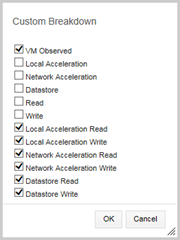

In the image above, latency is dramatically improved, which again is the real measure of impact. A more detailed view of the same data is available by selecting a “Custom” breakdown and ticking the checkboxes as shown below:

Now take a look at the VM’s latency again. Hover over any point on the chart that you find interesting. The pop-up dialog shows detailed, genuinely valuable information, including:

- What the latency would have been had the I/O come from the datastore (datastore read or write latency).

- What contributed to the effective “VM Observed” latency.
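One simple way to reason about what contributes to “VM Observed” latency is an I/O-weighted average of where each I/O completed. This is a simplified mental model, not how FVP computes the metric; the latency figures are hypothetical, and the write case assumes writes are acknowledged once buffered in the acceleration tier, as described earlier.

```python
def effective_latency_ms(
    accel_fraction: float, accel_latency_ms: float, datastore_latency_ms: float
) -> float:
    """I/O-weighted mean latency when accel_fraction of the I/Os complete in
    the acceleration tier and the remainder complete at the datastore."""
    if not 0.0 <= accel_fraction <= 1.0:
        raise ValueError("accel_fraction must be between 0 and 1")
    return accel_fraction * accel_latency_ms + (1.0 - accel_fraction) * datastore_latency_ms


# Hypothetical latencies: 0.2 ms acceleration tier, 8 ms datastore.
reads = effective_latency_ms(0.90, 0.2, 8.0)   # 90% of reads served from cache
writes = effective_latency_ms(1.0, 0.2, 8.0)   # write-back: acknowledged from the acceleration tier
print(reads, writes)
```

Even a 10% miss rate contributes most of the effective read latency here, which is why a latency spike is worth correlating with the datastore lines in the custom breakdown.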

What to look for

The desired result on the Latency tab is to have the “VM Observed” line as low and as consistent as possible. There may be times when the VM-observed latency is not quite as low as you might expect. The causes for this are numerous and a subject for another post, but FVP provides some good indications of the sources of that latency. Switch over to the “Custom” breakdown described earlier to see this more clearly. This view is an effective tool for understanding the causes of an occasional latency spike.

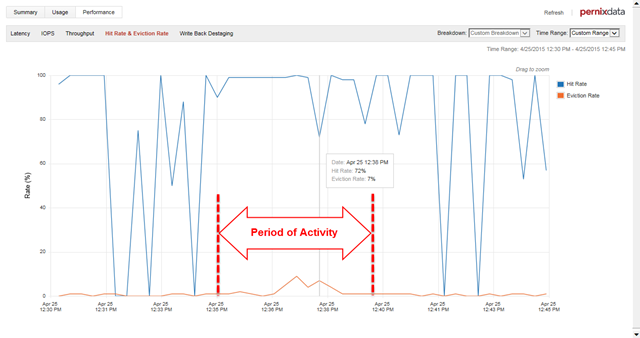

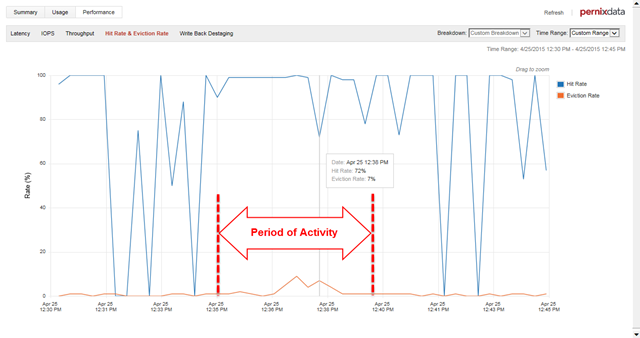

Hit & Eviction rate

Hit rate is the percentage of reads that are serviced by the acceleration tier rather than by the datastore. It is great to see this measurement high, but it is not the only indicator of how well the environment is operating. It is complementary to the other metrics and shouldn’t be looked at in isolation. It only reflects read caching hits, and it conveys them as a percentage whether there are 2,000 IOPS or 2 IOPS coming from the VM.
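Because hit rate is a ratio, very different amounts of read activity can produce the same figure. A minimal sketch, with hypothetical counts:

```python
from typing import Optional


def hit_rate_pct(read_hits: int, read_misses: int) -> Optional[float]:
    """Percentage of reads served by the acceleration tier."""
    total = read_hits + read_misses
    if total == 0:
        return None  # no reads in the interval: hit rate is undefined
    return 100.0 * read_hits / total


print(hit_rate_pct(1, 1))        # 2 reads per second
print(hit_rate_pct(1000, 1000))  # 2,000 reads per second
```

Both calls report the same 50%, which is why the hit rate should be read alongside the IOPS and Throughput tabs.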

There are times when the hit rate isn’t as high as you’d expect. Some causes of a lower-than-expected hit rate include:

- Large amounts of sequential writes. The graph measures read “hits” and will see a write as a “read miss”.

- Little or no I/O activity on the monitored VM.

- In-guest activity that you are unaware of. For instance, an in-guest SQL backup job might flush out the otherwise good cache contents for that particular VM, and a drop in hit rate can be a leading indicator of such activity. Thanks to the new Intelligent I/O profiling feature in FVP 2.5, you can optimize the cache for these types of scenarios. See Frank Denneman’s post for more information about this feature.
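Following the description of the first cause above, where a write is seen as a “read miss”, here is a sketch of how a burst of sequential writes can drag the reported percentage down even though the reads themselves are caching well. The counting rule is taken from the description above, and the numbers are hypothetical.

```python
from typing import Optional


def hit_rate_writes_as_misses(read_hits: int, read_misses: int, writes: int) -> Optional[float]:
    """Hit rate when writes are counted in the denominator as misses
    (per the description above; counts are hypothetical)."""
    total = read_hits + read_misses + writes
    if total == 0:
        return None
    return 100.0 * read_hits / total


print(hit_rate_writes_as_misses(900, 100, 0))     # reads only
print(hit_rate_writes_as_misses(900, 100, 4000))  # same reads plus a sequential write burst
```

The reads are served from cache exactly as well in both cases, but the reported figure falls from 90% to 18%.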

Let’s look at the Hit Rate for the period we are interested in.

As you can see above, the period of activity is the only part we should pay attention to. Notice in the previous graphs that outside of the active period we were interested in, there was little to no I/O activity.

A low hit rate does not necessarily mean that a workload hasn’t been accelerated. It simply provides an additional data point for understanding. In addition to looking at the hit rate, a good strategy is to look at the number of reads on the IOPS or Throughput tab by creating a custom view with the following settings:

Now we can better see how many reads are actually occurring, and how many are coming from cache versus the backing datastore. It puts much better context around the situation than relying entirely on Hit Rate.
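To show why absolute read counts add context, this sketch contrasts a naive average of per-interval hit rates with totals computed only over the active period. The per-interval `(read_hits, read_misses)` counts and the `min_reads` activity threshold are hypothetical.

```python
from typing import List, Tuple

# Hypothetical per-interval (read_hits, read_misses) counts; the idle
# intervals on either side mimic the quiet periods around the workload.
Interval = Tuple[int, int]


def naive_mean_hit_rate(intervals: List[Interval]) -> float:
    """Average the per-interval hit rates, treating every interval equally."""
    rates = [100.0 * h / (h + m) for h, m in intervals if h + m > 0]
    return sum(rates) / len(rates)


def active_period_reads(intervals: List[Interval], min_reads: int = 100):
    """Reads from cache vs. datastore over intervals with real activity,
    plus the volume-weighted hit rate for that period."""
    active = [(h, m) for h, m in intervals if h + m >= min_reads]
    from_cache = sum(h for h, _ in active)
    from_datastore = sum(m for _, m in active)
    total = from_cache + from_datastore
    hit_rate = None if total == 0 else 100.0 * from_cache / total
    return from_cache, from_datastore, hit_rate


intervals = [(0, 1), (0, 2), (4500, 500), (4800, 200), (0, 1)]
print(naive_mean_hit_rate(intervals))
print(active_period_reads(intervals))
```

A handful of stray misses during idle intervals drags the naive average far below the hit rate the workload actually saw.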

Eviction Rate tells us the percentage of blocks being evicted at any point in time. A very low eviction rate indicates that FVP is lazily evicting data on an as-needed basis to make room for new incoming hot data, and is a good sign that the acceleration tier is large enough to handle the general working set of data. If the eviction rate ramps upward, data that would otherwise be hot is no longer staying in the acceleration tier. Eviction rates are therefore a good indicator for determining whether your acceleration tier is large enough.
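The link between eviction rate and acceleration tier size can be sketched with a toy cache. The post doesn’t describe FVP’s eviction policy, so this uses plain LRU as a stand-in, defines eviction rate as evictions per access, and uses a cyclic access pattern (a worst case for LRU); all of these are assumptions for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity block cache with least-recently-used eviction
    (a stand-in policy, not FVP's)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks: "OrderedDict[int, None]" = OrderedDict()
        self.evictions = 0

    def access(self, block: int) -> bool:
        """Touch a block; return True on a hit, False on a miss."""
        if block in self.blocks:
            self.blocks.move_to_end(block)  # mark as most recently used
            return True
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
            self.evictions += 1
        self.blocks[block] = None
        return False


def eviction_rate_pct(capacity: int, working_set: int, accesses: int) -> float:
    """Evictions per access, as a percentage, for a cyclic scan of working_set blocks."""
    cache = LRUCache(capacity)
    for i in range(accesses):
        cache.access(i % working_set)
    return 100.0 * cache.evictions / accesses


print(eviction_rate_pct(capacity=1000, working_set=800, accesses=10_000))
print(eviction_rate_pct(capacity=1000, working_set=1200, accesses=10_000))
```

When the working set fits, evictions stay at zero; once it exceeds the cache, hot blocks are pushed out before they can be reused and the eviction rate climbs sharply.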

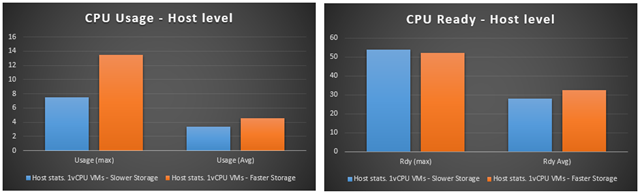

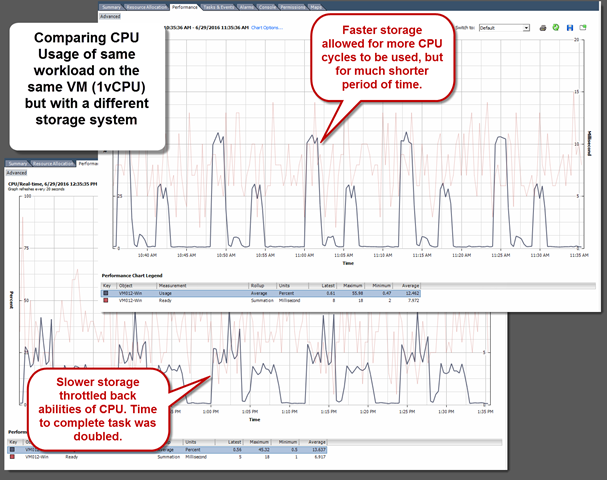

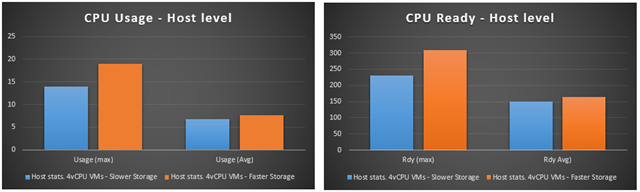

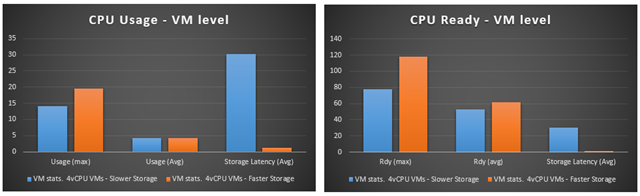

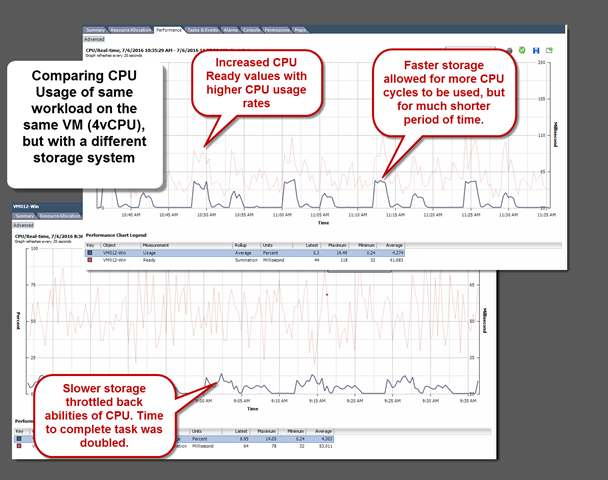

The importance of context and the correlation to CPU cycles

When viewing performance metrics, context is everything. Performance metrics are guilty of standing on their own far too often. Or perhaps, it is human nature to want to look at these in isolation. In the previous graphs, notice the relationship between the IOPS, Throughput, and Latency tabs. They all play a part in delivering storage payload.

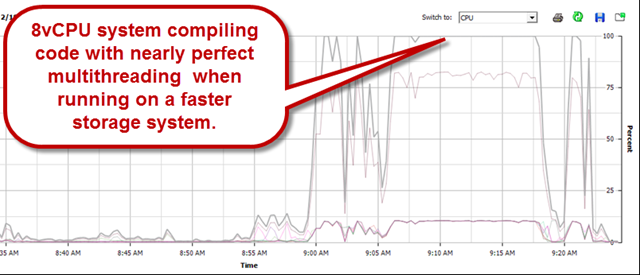

Viewing a VM’s ability to generate high IOPS and Throughput is good, but it can also be misleading. A common but incorrect assumption is that once a VM is on fast storage, it will start doing some startling number of IOPS. That is simply untrue. It is the application (and the OS it lives on) that dictates how many I/Os it pushes at any given time. I know of many single-threaded applications that are very I/O intensive, and several multithreaded applications that aren’t. Thus, it’s not about chasing IOPS, but rather about the ability to deliver low latency in a consistent way. It is that low latency that lets the CPU breathe freely rather than wait for the next I/O to complete.

What do I mean by “breathe freely”? With real-world workloads, the difference between fast and slow storage I/O is whether CPU cycles can proceed with the request or must sit waiting on storage. A given workload may be performing some defined activity that takes a certain number of CPU cycles and a certain number of storage I/Os to accomplish. An infrastructure that allows those I/Os to complete more quickly lets those CPU cycles complete the request in a shorter amount of time.

Looking at CPU utilization can also be a helpful indicator of your storage infrastructure’s ability to deliver the I/O. A VM’s ability to peak at 100% CPU is often a good thing from a storage I/O perspective. It means that VM is less likely to be storage I/O constrained.
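A back-of-the-envelope model makes the CPU relationship concrete. It assumes a single thread that does a fixed amount of CPU work per transaction and then waits for each of its I/Os in turn, with no overlap; the 5 ms of CPU and 10 I/Os per transaction are hypothetical.

```python
def txn_per_sec(cpu_ms: float, ios_per_txn: int, io_latency_ms: float) -> float:
    """Transactions per second for a single thread that computes, then waits
    for each I/O serially (no overlap between CPU work and I/O)."""
    return 1000.0 / (cpu_ms + ios_per_txn * io_latency_ms)


def cpu_busy_pct(cpu_ms: float, ios_per_txn: int, io_latency_ms: float) -> float:
    """Share of wall-clock time the thread spends on the CPU rather than waiting."""
    return 100.0 * cpu_ms / (cpu_ms + ios_per_txn * io_latency_ms)


# Same work per transaction (hypothetical): 5 ms of CPU and 10 I/Os.
for latency_ms in (10.0, 0.5):
    print(latency_ms, txn_per_sec(5, 10, latency_ms), cpu_busy_pct(5, 10, latency_ms))
```

The work per transaction never changes, yet cutting latency from 10 ms to 0.5 ms raises throughput from about 9.5 to 100 transactions per second, and CPU busy time rises from under 5% to 50%. Higher CPU utilization here is a sign the VM is waiting less on storage.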

Summary

The only thing better than a really fast infrastructure for your workloads is understanding how well it is performing. Hopefully this post offers up a few good tips when you look at your own workloads leveraging PernixData FVP.

While VMware vSAN has proven to be extremely well suited for converging all applications into the same environment, business requirements may dictate a need for self-contained, independent environments isolated in some manner from the rest of the data center. In "Cost Effective Independent Environments using vSAN" found on VMware’s StorageHub, I walk through four examples that show how business requirements may warrant a cluster of compute and storage dedicated for a specific purpose, and why vSAN is an ideal solution. The examples provided are:
