I’ve been using Veeam Backup & Replication in my production environment for a while now, and in hindsight, it was one of the best investments we’ve ever made in our IT infrastructure. It has completely changed how much operational overhead it takes to protect our VMs and the data they serve up. Using a data protection solution built on VMware’s APIs provides the simplicity and flexibility we had always wanted. Moving away from array-based features for protection has allowed VM protection to reflect our desired RPO and RTO requirements rather than the limitations imposed by LUN sizes, array capacity, or array functionality.

While Veeam is extremely simple in many respects, it is also a versatile, feature-packed application that can be configured in a variety of ways. That versatility can be a little confusing to a new user, so I wanted to share 25 tips that will help make for a quick and successful deployment of Veeam Backup & Replication in your environment.

First, let’s go over a few assumptions that form the basis for my recommendations:

- There are two sites that need protection.

- VMs and data need to be protected at each site, locally.

- VMs and data need to be protected at each site, remotely.

- A NAS target exists at each site.

- Quick deployment is important.

- You’ve already read all of the documentation.

Architecture

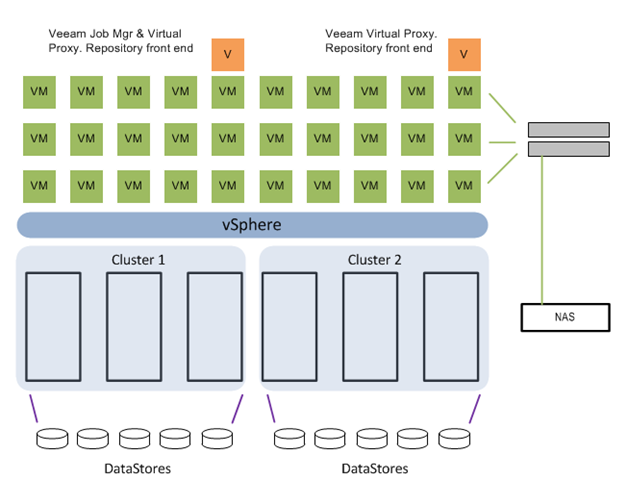

There are a number of different ways to set up the architecture for Veeam. I will show a few of the simplest arrangements:

In the arrangement below, there are no physical servers, only a NAS device. This is a simplified version of what I use. If you wanted a rebuilt server (Windows or Linux) acting purely as a storage target, it could take the place of the NAS. The architecture would stay the same.

Optionally, a physical server acting not just as a storage target but also as a physical proxy would look something like this:

Below is a combination of both: a physical server acts as the proxy but, like the virtual proxy, uses an SMB share (in this case, on a NAS unit) to house the data.

Implementation tips

These tips focus not so much on what may ultimately suit your environment best (only you know that) or on leveraging every feature in the product, but rather on getting you up and running as quickly as possible so you can start seeing great results.

Job Manager Servers & Proxies

1. Put the Job Manager server, any proxies, and the backup targets on their own VLAN to create a dedicated backup network.

2. Set up SNMP monitoring on any physical ports used in the backup arrangement. It will help you understand how heavily the physical links get utilized, and for how long.
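To turn the SNMP counters from tip 2 into a utilization figure, here is a minimal Python sketch. It assumes you are already polling a port's 64-bit octet counter (such as IF-MIB ifHCInOctets) at a fixed interval with whatever monitoring tool you use; the function name and sample numbers are illustrative only.

```python
# Minimal sketch: link utilization from two samples of a 64-bit SNMP octet
# counter (e.g. IF-MIB ifHCInOctets). Polling itself is out of scope here;
# utilization_pct is a hypothetical helper, not part of any SNMP library.

COUNTER64_MAX = 2**64


def utilization_pct(octets_t0: int, octets_t1: int,
                    interval_s: float, if_speed_bps: int) -> float:
    """Percent of link capacity used between two counter samples."""
    if interval_s <= 0 or if_speed_bps <= 0:
        raise ValueError("interval and link speed must be positive")
    # Modulo handles a single counter wrap between the two polls.
    delta_octets = (octets_t1 - octets_t0) % COUNTER64_MAX
    bits = delta_octets * 8
    return 100.0 * bits / (interval_s * if_speed_bps)


# A 1 Gb/s port that moved 7.5 GB during a 60-second poll was saturated.
print(utilization_pct(0, 7_500_000_000, 60, 1_000_000_000))  # 100.0
```

Graphing this per poll over a backup window shows both how hot the links run and for how long, which is exactly what the tip is after.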

3. Make sure to give the Job Manager VM enough resources to play with, especially if it will have any data mover/proxy responsibilities. The deployment documentation has good information on this, but for starters, give it 4 vCPUs and 5GB of RAM.

4. If there is more than one cluster to protect, consider building a virtual proxy inside each cluster it will be responsible for protecting, then assign it to the jobs that protect VMs in that cluster. In my case, I use PernixData FVP in two clusters, and the data stores that house those VMs are only accessible by their own cluster (a constraint of FVP). Because of that, I have a virtual proxy living in each cluster, with backup jobs configured to use a specific virtual proxy. These virtual proxies have a special setting in FVP that instructs the VMs being backed up to flush their write cache to the backing storage.

Storage and Design

5. Keep the design simple, even if you know you will need to adjust it later. Architectural adjustments are easy to make with Veeam, so go ahead, point Veeam at the target, and start running some jobs. Use this time to get familiar with the product and to begin protecting the crown jewels as quickly as possible.

6. Let Veeam use the default SQL Server Express instance on the Veeam Job Manager VM. This is a very reasonable and simple configuration that should be adequate for a lot of environments.

7. Question whether a physical proxy is needed. Physical proxies are typically used for one of three reasons: 1.) they offload job-processing CPU cycles from your cluster; 2.) in simple arrangements, a Windows-based physical proxy can also be the repository (aka storage target); 3.) they let you leverage the "direct-from-SAN" feature by plugging the system into your SAN fabric. In my opinion, the last one warrants the most hesitation. Here is why:

- Some storage arrays do not have a "read-only" iSCSI connection type. When that is the case, special care needs to be taken on the physical server attached directly to the SAN to ensure that it cannot initialize the data store. The reality is that you are one mistake away from a very long day. I do not like this option when the array offers no secondary safety mechanism in the form of a "read-only" connection type.

- Direct-from-SAN access can be a very good method for moving data to your target. So good that it may stress your backing storage enough (via link saturation or physical disk limits) to perhaps interfere with your production I/O requirements.

- Additional steps must be taken when using write-buffering mechanisms that do not live on the storage array (e.g. PernixData).

8. Veeam can back up to an SMB share or an NFS mount. If you choose NFS, make sure the storage target runs native Linux. Most NAS units, like a Synology, are indeed just a tweaked version of Linux, and it would be easy to conclude that you should just use NFS. However, in this case you may run into two problems:

- The SMB connection to a NAS unit will likely be faster (which is most certainly the first time in history that an SMB connection has been faster than an NFS connection).

- The Job Manager might not be able to manage the jobs on that NAS unit (connected via NFS) properly. This is due to BusyBox and Perl on the Synology not getting along. For me, this resulted in Veeam being unable to remove backups that had aged out of retention. Changing over to an SMB connection on the NAS improved performance significantly and allowed job handling to work as desired.

9. Veeam has a great new feature in version 7.x called a "Backup Copy" job, which allows a backup made locally to be shipped to a remote site. The Backup Copy job achieves one of the most basic requirements of data protection in the simplest of ways: two copies of the data in two different locations, with the benefit of processing the backup job only once. Although it is a great feature, it behaves differently from a standard backup job and warrants some time spent on it before putting it into production. For a speedy deployment, it might be best simply to configure two jobs: one to a local target and one to a remote target. This will give you time to experiment with the Backup Copy job feature.

10. There are compelling reasons for and against using a rebuilt server or a NAS unit as a storage target. Both are attractive options. I ended up using a dedicated NAS unit: its form factor, drive bay count, and overall cost of provisioning made it the only option that could match my requirements.

Operations

11. In Veeam B&R, "Replication Jobs" are different from "Backup Jobs." Instead of trying to figure out all of the nuances of both right away, use just the "Backup Job" function with both local and remote targets. This will give you time to better understand the characteristics of the replication functionality. You might also find that the "Backup Job" suits your environment and needs better than the replication option.

12. If daily backups are going to both local and offsite targets (and you are not using the "Backup Copy" option), have them run 12 hours apart from one another to reduce RPOs.
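To see why the 12-hour offset in tip 12 helps, here is a small Python sketch that computes the longest stretch in a 24-hour cycle with no new restore point. It assumes each daily job yields a usable restore point at its start hour (job duration is ignored) and that a restore point on either target is acceptable; the start hours are examples.

```python
# Minimal sketch: worst-case gap between restore points for daily jobs,
# given each job's start hour. Assumes every job succeeds, ignores job
# duration, and treats a restore point on either target as usable.

def worst_case_gap_hours(start_hours: list[int]) -> int:
    """Longest stretch (hours) with no new restore point in a 24-hour cycle."""
    if not start_hours:
        raise ValueError("need at least one job")
    times = sorted(h % 24 for h in start_hours)
    gaps = [b - a for a, b in zip(times, times[1:])]
    # Wrap-around gap from the last job of the day to the first one tomorrow.
    gaps.append(times[0] + 24 - times[-1])
    return max(gaps)


print(worst_case_gap_hours([1, 2]))   # 23  (local and offsite back to back)
print(worst_case_gap_hours([1, 13]))  # 12  (12 hours apart)
```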

13. Build a test VM to test backup and restore. Restore it in the many ways that Veeam has to offer. It is best to understand this now rather than when you really need to.

14. I like the job chaining/dependency feature, which allows you to chain multiple jobs together. But remember that if a job in the chain is started manually, the jobs after it will run too. The easiest way to accommodate this is to temporarily remove the job from the chain.
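The chaining behavior in tip 14 can be illustrated with a toy Python model. This is not Veeam's API or configuration format: each hypothetical job simply maps to the job set to run after it, and "removing" a job relinks its predecessor to its successor.

```python
# Toy model of job chaining (not Veeam's API): each job maps to the job
# configured to run after it. Job names are hypothetical.

def run_chain(start: str, next_job: dict[str, str]) -> list[str]:
    """Jobs that run, in order, when `start` is launched manually."""
    ran: list[str] = []
    job = start
    while job is not None and job not in ran:  # stop at chain end or a loop
        ran.append(job)
        job = next_job.get(job)
    return ran


def remove_from_chain(job: str, next_job: dict[str, str]) -> dict[str, str]:
    """Detach `job`: its predecessor now points at its successor."""
    successor = next_job.get(job)
    relinked = {}
    for k, v in next_job.items():
        if k == job:
            continue  # the detached job no longer triggers anything
        new_v = successor if v == job else v
        if new_v is not None:
            relinked[k] = new_v
    return relinked


chain = {"Job-A": "Job-B", "Job-B": "Job-C"}  # A -> B -> C
print(run_chain("Job-B", chain))     # ['Job-B', 'Job-C']  (C runs too)
detached = remove_from_chain("Job-B", chain)
print(detached)                      # {'Job-A': 'Job-C'}
print(run_chain("Job-B", detached))  # ['Job-B']
```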

15. Your "Backup Repository" is just that, a repository for data. It can be a Windows Server, a Linux Server, or an SMB share. If you don’t have a NAS unit, stuff an old server (Windows or Linux) with some drives in it and it will work quite well for you.

16. Devise a simple, clear job naming scheme. Something like [BackupType]-[Descriptive Name]-[TargetLocation] will quickly tell you what a job is and where it is going. If you use folders in vCenter to organize your VMs, and your backups mirror them, you could also use the folder name. An example would be "Backup-SharePointFarm-LOCAL," which quickly and accurately describes the job.
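A naming scheme is easiest to keep when it is generated and checked rather than typed by hand. Here is a minimal Python sketch of the [BackupType]-[Descriptive Name]-[TargetLocation] pattern from tip 16; the allowed backup types and target values are assumptions for illustration.

```python
# Minimal sketch of the [BackupType]-[Descriptive Name]-[TargetLocation]
# scheme. The allowed values below are illustrative assumptions.
import re

BACKUP_TYPES = {"Backup", "Replica", "BackupCopy"}
TARGETS = {"LOCAL", "REMOTE"}
NAME_RE = re.compile(
    r"^(?P<type>[A-Za-z]+)-(?P<name>[A-Za-z0-9]+)-(?P<target>[A-Z]+)$")


def job_name(backup_type: str, name: str, target: str) -> str:
    if backup_type not in BACKUP_TYPES or target not in TARGETS:
        raise ValueError("unknown backup type or target")
    if not name.isalnum():
        # Keep '-' reserved as the field separator.
        raise ValueError("descriptive name must be alphanumeric")
    return f"{backup_type}-{name}-{target}"


def parse_job_name(job: str) -> dict[str, str]:
    m = NAME_RE.match(job)
    if m is None:
        raise ValueError(f"{job!r} does not follow the naming scheme")
    return m.groupdict()


print(job_name("Backup", "SharePointFarm", "LOCAL"))
# Backup-SharePointFarm-LOCAL
print(parse_job_name("Backup-SharePointFarm-LOCAL"))
# {'type': 'Backup', 'name': 'SharePointFarm', 'target': 'LOCAL'}
```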

17. Start with a simple schedule, say once per day. Then watch the daily backup jobs and the synthetic fulls to see what sort of RPOs/RTOs are realistic.

18. Repository naming. Be descriptive, but come up with a naming scheme that remains clear even if you haven’t been in the application for several weeks. I like indicating the location of the repository, whether it is intended for local or remote jobs, and what kind of repository it is (Windows, Linux, or SMB). For example: VeeamRepo-[LOCATION]-for-Local(SMB)
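The same approach works for the repository pattern in tip 18. This minimal Python sketch builds names of the form VeeamRepo-[LOCATION]-for-[Local|Remote]([Windows|Linux|SMB]); the location codes are hypothetical.

```python
# Minimal sketch of the repository naming pattern:
# VeeamRepo-[LOCATION]-for-[Local|Remote]([Windows|Linux|SMB]).
# Location codes such as "hq" and "dr" are hypothetical.

SCOPES = {"Local", "Remote"}
KINDS = {"Windows", "Linux", "SMB"}


def repo_name(location: str, scope: str, kind: str) -> str:
    if scope not in SCOPES:
        raise ValueError(f"scope must be one of {sorted(SCOPES)}")
    if kind not in KINDS:
        raise ValueError(f"kind must be one of {sorted(KINDS)}")
    return f"VeeamRepo-{location.upper()}-for-{scope}({kind})"


print(repo_name("hq", "Local", "SMB"))     # VeeamRepo-HQ-for-Local(SMB)
print(repo_name("dr", "Remote", "Linux"))  # VeeamRepo-DR-for-Remote(Linux)
```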

19. Repository organization. Create a good tree structure for organization and scalability. Veeam does a very good job of organizing the backups once you assign a specific location (share name) on a repository. However, create a structure that lets you continue with the same naming convention as your needs evolve. For instance, a logical share name assigned to a repository might be \\nas01\backups\veeam\local\cluster1. This arrangement allows different types of backups to live in different branches.
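One way to keep the tree from tip 19 consistent is to build every repository path from the same ordered components (host, share, then branches such as product, scope, and cluster). The Python sketch below does that with plain string joining; the host and branch names are only the examples from the tip.

```python
# Minimal sketch: build UNC repository paths from one consistent tree
# (host, share, then branches), so new branches follow the same convention.

def share_path(host: str, share: str, *branches: str) -> str:
    parts = [host, share, *branches]
    if any(not p or "\\" in p or "/" in p for p in parts):
        raise ValueError("components must be non-empty with no separators")
    return "\\\\" + "\\".join(parts)  # leading \\ marks a UNC path


print(share_path("nas01", "backups", "veeam", "local", "cluster1"))
# \\nas01\backups\veeam\local\cluster1
```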

20. Veeam might prevent you from creating more than one repository on the same share (it would see \\nas01\backups\veeam\local\cluster1 and \\nas01\backups\veeam\local\cluster2 as the same). Create DNS aliases to fool it, then make those two targets something like \\nascluster1\backups\veeam\local\cluster1 and \\nascluster2\backups\veeam\local\cluster2.
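The collision in tip 20 can be modeled in a few lines of Python. The key assumption here, inferred from the tip rather than from Veeam documentation, is that two repositories clash when their UNC paths share the same host and share name, regardless of subfolders; DNS aliases such as nascluster1 and nascluster2 (both resolving to the same NAS) make the host part distinct.

```python
# Minimal sketch of the clash described in tip 20. Assumption (inferred from
# the tip, not from Veeam docs): repositories collide when their UNC paths
# have the same host and share name, ignoring the subfolders below them.
from collections import defaultdict


def host_share(unc: str) -> tuple[str, str]:
    host, share, *_ = unc.lstrip("\\").split("\\")
    return host.lower(), share.lower()


def collisions(paths: list[str]) -> list[list[str]]:
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for p in paths:
        groups[host_share(p)].append(p)
    return [g for g in groups.values() if len(g) > 1]


same_host = [r"\\nas01\backups\veeam\local\cluster1",
             r"\\nas01\backups\veeam\local\cluster2"]
aliased = [r"\\nascluster1\backups\veeam\local\cluster1",
           r"\\nascluster2\backups\veeam\local\cluster2"]

print(len(collisions(same_host)))  # 1  (one clashing group)
print(len(collisions(aliased)))    # 0  (aliases make the hosts distinct)
```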

21. When in doubt, leave the defaults. Veeam has put great effort into making sure that neither you nor the software trips over itself. Unsure how many jobs to run concurrently? Stick with the default. Wondering which backup mode to use (reverse incremental versus incrementals with synthetic fulls)? Stay with the default, and save the experimentation for later.

22. Don’t overcomplicate the schedule (at least initially). Veeam may give you flexibility you never had with array-based protection tools, but there is no need to make it complicated. Perhaps group the VMs by something you can keep track of, such as the folders they are contained in within vCenter.

23. Each backup job’s storage optimization setting can be matched to the target it uses: WAN target, LAN target, or local target. This is easily overlooked, but it will make a difference in backup performance.

24. How many backups you can keep is a function of change rate, frequency, dedupe and compression, and the size of your target. Yep, that is a lot of variables. If nothing else, find some storage that can serve as the target for, say, 2 weeks. That should give you a pretty good sampling of all of the above.
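For a first guess before real data comes in, the variables in tip 24 can be combined into a back-of-the-envelope Python estimate. This is a deliberately simplified model, not Veeam's sizing formula: one full backup plus daily incrementals, each shrunk by a single combined dedupe/compression ratio, ignoring synthetic fulls, reverse incrementals, and free-space headroom. The example figures are made up.

```python
# Back-of-the-envelope sketch: how many daily restore points fit on a target.
# Simplified model (an assumption, not Veeam's sizing formula): one full plus
# daily incrementals, each reduced by one combined dedupe/compression ratio.
import math


def restore_points(target_gb: float, source_gb: float,
                   daily_change_pct: float, reduction_ratio: float) -> int:
    if reduction_ratio <= 0 or daily_change_pct <= 0:
        raise ValueError("reduction ratio and daily change must be positive")
    full_gb = source_gb / reduction_ratio
    incr_gb = source_gb * daily_change_pct / 100 / reduction_ratio
    if full_gb > target_gb:
        return 0  # not even one full backup fits
    return 1 + math.floor((target_gb - full_gb) / incr_gb)


# 2 TB of VMs, 5% daily change, 2:1 reduction, 2 TB target
print(restore_points(2000, 2000, 5, 2))  # 21
```

Once two weeks of real jobs have run, replace the guessed change rate and reduction ratio with the observed ones.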

25. Pick one item/feature each week and spend an hour or two looking into it. This will let you find out more about, say, Changed Block Tracking, or what the application-aware image processing feature does. Your reputation (and perhaps your job) may rely on your ability to recover systems and data, so come up with a handful of scenarios and see if they work.

Veeam is an extremely powerful tool that will simplify the layers of protection in your environment. Features like SureBackup, Virtual Labs, and Veeam’s replication offerings are all very good, but more than likely they do not need to be part of your initial deployment plan. Stay focused, and get that new backup software up and running as quickly as possible. You and your organization will be better off for it.

– Pete

I completely agree that Veeam has revolutionized how we do things. Great product, great support, and great price…. what’s not to love? Every company in the world should take a page from their book on pricing… I mean, sheesh, have you ever tried to figure out licensing with Microsoft? You need a PhD in license-crap-ology.

Yeah, it’s annoying that EqualLogic can’t do a read-only setting. When I train on veeam I find myself saying things over and over again, like don’t ever ever mess with the volumes or you will overwrite the vmfs and you will be screwed.

I guess we have a new mission! Try to convince EqualLogic to add read-only iSCSI. I know this guy wrote to them in 2010 http://www.modelcar.hk/?p=2938 … hmm, we are probably screwed if they haven’t done it by now.

Here’s my post, though. Please promote it and create a similar post, and I will promote yours.

http://michaelellerbeck.com/2014/02/25/dell-equallogic-feature-request-to-equallogic-please-add-read-only-to-specific-iscsi-initiator/

Then again, I’m kind of scared by the whole hot-add process too! But I have never been bitten by it… and haven’t ever heard of anyone being bitten by it 🙂

One thing that has hit me recently is that Veeam will use pretty much any resources you can give it. Don’t think of it as a ‘backup’ server (we would usually just throw whatever spare hardware we had at it); go out and buy some good hardware and get all the benefits of Veeam!

Wondering about the comment, “When I train on veeam I find myself saying things over and over again, like don’t ever ever mess with the volumes or you will overwrite the vmfs and you will be screwed.”

If I understand the concern correctly, you are worried that when Veeam mounts the VMFS volume during a VAAI-leveraged backup, Windows will attempt to initialize it unless automount is disabled? (Not sure where you do this.)

Isn’t it possible to use the EQL snapshots as backup targets? That way you aren’t messing with the production data. I’m on FW 6.0.6, and an option under Group\Volumes\Volume\Snapshots\Set Access Type is Set RW or Set RO.

Getting ready to migrate to 7.0.2 for my PS6000s and PS6100. I have to update MEM to 1.2 first, and I’m using VUM for that. I read the release notes and unfortunately didn’t see anything specifically about adding read-only enhancements for volumes.

I especially like this comment in the Release notes for 7.0.x:

Firmware Update Process Might Not Complete

Under rare conditions, during the restart process of a firmware update, the array might fail to come up. If this occurs, the following error will be shown in the console: LV2: log failure -read_non_existing_volume_id: invalid PsvId, and you should contact your Dell support representative.

That sure builds confidence. Luckily I have a “spare” array that I can test 7.0.2 on so I’ll report back if I have any issues.

Very good question Dave.

The real issue ONLY comes up if you have a backup server directly connected to your SAN switchgear, and thus to your storage arrays. During a backup, that physical proxy (a Windows Server) will want to connect directly to the VMFS volume. If the preventive measures (based purely on the OS of the physical proxy) are not set correctly, the Windows server can initialize the VMFS volume and make it unusable by the vSphere cluster. We all know how updates or GPO settings can unexpectedly change things on a system in ways we never intended. This is why I feel it is such a dangerous practice unless there is a preventive measure on the SAN array to protect access.

The only read-only controls the EqualLogic arrays have are, as you mentioned, by volume, whether it is a regular volume or an array-based snapshot brought online. There are currently no “read-only” controls by iSCSI connection or endpoint. In order for Veeam to integrate with some of EqualLogic’s features, it would most likely need a plug-in like the one it has for HP-based storage. I’m sure that would be nice, but there are some engineering complexities the two parties would need to address. A simple way to mitigate this specific risk would be a read-only connection type. I’ve provided this feedback to the product team, so hopefully it will be on the roadmap soon.

Hey, you made ‘THE WORD FROM GOSTEV’.

Nice!

I have a VMware Essentials Plus cluster, and I am trying to use Backup Copy jobs to copy to a remote NAS over a 1Gb fiber connection. Can you please elaborate on using a separate VLAN for the backup network, specifically on setting it up? Do you have more than one NIC in your backup server? I am having a problem with Backup Copy jobs saturating my network, causing device disconnects, etc. My environment is essentially the same as your drawings:

Veeam B&R running on a virtual Windows Server 2008 R2 VM with 16GB RAM. iSCSI connection to NAS storage. Data stores are on an HP P2000 G3 MSA 6Gb SAS (can’t back up directly from storage).

Backups work fine. It’s the remote copy jobs that kill my network.

Hi Jeff. Thanks for reading. Here are some responses to your questions:

VLANs

The idea here is that, if your hosts support it, you set things up in a way that minimizes interference with production VMs and control plane traffic (vSphere Mgmt, etc.), and segment the traffic as much as possible. This is done in two parts: the switch/network config, and the vSphere host vSwitch/uplink configs. I like to have a dedicated “Backup Network” VLAN on my switches (trunked/tagged for the vSphere hosts, and access ports for any storage targets) to segment the traffic onto its own VLAN with its own subnet (e.g. VLAN 9 assigned to 10.10.9.x/24). Yes, the switches still have to process the traffic, but at least it’s not on the same broadcast domain. Then, if your hosts support it, dedicate a pair of uplinks to their own vSwitch, and place a “Backup Network” portgroup on that vSwitch (set the VST VLAN to whatever VLAN ID you will be using). This will help keep backup traffic off the uplinks used by any other services (production VMs, vSphere Mgmt, etc.).
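Once the VLAN exists, a quick check of the addressing plan can catch an endpoint that was left on a production subnet. The Python sketch below uses the standard ipaddress module with the example 10.10.9.0/24 backup subnet from above; the host names and addresses in the plan are hypothetical.

```python
# Minimal sketch: confirm every local backup endpoint sits on the backup
# VLAN's subnet. 10.10.9.0/24 is the example subnet above; the host names
# and addresses below are hypothetical.
import ipaddress

BACKUP_NET = ipaddress.ip_network("10.10.9.0/24")  # e.g. VLAN 9


def off_backup_vlan(hosts: dict[str, str]) -> list[str]:
    """Names of hosts whose address is outside the backup subnet."""
    return [name for name, ip in hosts.items()
            if ipaddress.ip_address(ip) not in BACKUP_NET]


plan = {
    "veeam-jobmgr": "10.10.9.10",
    "proxy-cluster1": "10.10.9.21",
    "proxy-cluster2": "10.10.1.22",  # mistake: still on a production subnet
    "nas01": "10.10.9.50",
}
print(off_backup_vlan(plan))  # ['proxy-cluster2']
```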

For my virtual backup proxies, I don’t have more than one NIC configured inside the VM, but have multiple virtual backup proxy VMs (one for each cluster). That is the better way to spread the resources.

Backup Copy Job

The Backup Copy jobs are the newish feature that looks great on paper (I was thrilled when the feature was announced), but they just don’t seem ready for prime time (note the issues on the forums). There are a couple of design flaws that seem to hurt production environments. Here is what I might recommend. Shelve the Backup Copy Job mechanism for the time being. Your goal is to get functioning backups asap. You can optimize later. Create a secondary standard backup job that is very similar to your local backup job, but set the target to the remote device and the network optimization to “WAN.” Then sit back and observe the times for the initial full backup/seed, the incrementals, and then any synthetic fulls. Note the times. Then experiment with the Backup Copy job on a smaller sampling of VMs and observe its behavior that way. Yes, this introduces a second time in which a job needs to be processed (doubling the number of times the VM needs to be snapshotted and the backup job processed), but it is very reliable. For the record, I still use a standard, second backup job to a remote target, and will probably continue to do so until I see some improvement in the logic of the Backup Copy Job feature.

Hi Pete, thanks for your reply. So, I am trying to get my head around this. Unfortunately, I currently don’t have any available uplinks on my host servers. I am planning on adding an additional 4-port Gb NIC to each server as soon as I can work out how I’m going to set this up. That should give me the ability to set up the backup VLAN as you describe. I’ve got to figure out the tagging of the fiber link between sites, as this is handled by switches at each site and then a firewall at each site. I need to talk to my firewall guru to see if we can make this work. Do you have any more details on setting up the VLANs between sites? I think I have this set up wrong. I’d love to be able to send you a couple of drawings showing the current setup and get your feedback. Is this possible?

Thanks

Hi Jeff,

No need to set up a VLAN across the link. The traffic should simply be routed from the source to the target. This is the most common method, as tunneling multiple VLANs across a WAN link usually involves implementing VTP on Cisco-only routers, etc. In your case, none of that is necessary.

Hi Jeff,

This is a brilliant article, and as someone who is new to Veeam, you’re saving me lots of time. Thanks for the diagrams as well, as they make this so much easier to understand.

Apologies, I put the wrong name. I meant Pete.