There are a number of things that I don’t miss about old physical infrastructures. One near the top of the list is the general lack of visibility into each and every system. Horribly underutilized hardware ran happily alongside overtaxed or misconfigured systems, and it all looked the same. Fortunately, virtualization has changed much of that nonsense, and performance trending data for VMs and hosts is a given.

Partners in the VMware ecosystem are able to take advantage of the extensibility by offering useful tools to improve management and monitoring of other components throughout the stack. The Dell Management Plug-in for VMware vCenter is a great example of that. It does a good job of integrating side-band management and event-driven alerting inside of vCenter. However, in many cases you still need to look at performance trending data for devices that may not inherently have that ability on their own. Switchgear is a great example of a resource that can be left in the dark. SNMP can be used to monitor switchgear and other types of devices, but its use is almost always absent in smaller environments. Fortunately, there are simple options to help provide better visibility even for the smallest of shops. This post will provide what you need to know to get started.

In this example, I will be setting up a general-purpose SNMP management system running Cacti to monitor the performance of some Dell PowerConnect switchgear. Cacti leverages RRDTool’s framework to deliver time-based performance monitoring and graphing. It can monitor a number of different types of systems supporting SNMP, but switchgear provides the best example that most everyone can relate to. At a very affordable price (free), Cacti will work just fine in helping with these visibility gaps.

Monitoring VM

The first thing to do is to build a simple Linux VM for the purpose of SNMP management. One would think there would be a free virtual appliance on the VMware Virtual Appliance Marketplace for this purpose, but if there is, I couldn’t find it. Any distribution will work, but my instructions cater to Debian-based distributions, particularly Ubuntu or an Ubuntu derivative like Linux Mint (my personal favorite). Set it for 1 vCPU and 512 MB of RAM. Assign it a static address on your network management VLAN if you have one; otherwise, your production LAN will be fine. While it is a single-purpose VM, you still have to live with it, so there is no need to punish yourself by leaving it bare bones. Go ahead and install the typical packages (e.g. vim, ssh, ntp) for convenience or functionality.

Templates are an option that extends the functionality of Cacti. In the case of the PowerConnect switches, the template will assist in providing information on CPU, memory, and temperature. A template for the PowerConnect 6200 line of switches is available from the Cacti documentation site (the URL is given in the installation steps). The instructions below will include how to install this.

Prepping SNMP on the switchgear

In the simplest of configurations (which I will show here), there really isn’t much to SNMP. For this scenario, you will be providing read-only SNMP access via a shared community name. The monitoring VM will poll these devices and update the database accordingly.

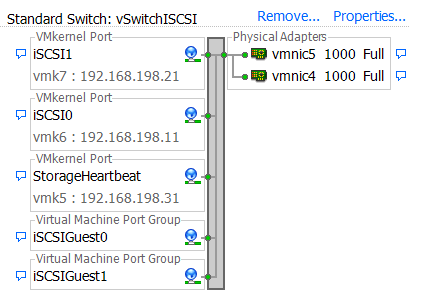

If your switchgear is isolated, as your SAN switchgear might be, then there are a few options to make the switches visible in the right way. Regardless of which option you use, the key is to make sure that your iSCSI storage traffic lives on a different VLAN from the management interface of the device. I outline a good way to do this in “Reworking my PowerConnect 6200 switches for my iSCSI SAN”.

There are a couple of options in connecting the isolated storage switches to gather SNMP data:

Option 1: Connect a dedicated management port on your SAN switch stack back to your LAN switch stack.

Option 2: Expose the SAN switch management VLAN using a port group on your iSCSI vSwitch.

I prefer option 1, but regardless, if it is iSCSI switches you are dealing with, you will want to make sure that management traffic is on a different VLAN than your iSCSI traffic to maintain the proper isolation of iSCSI traffic.

Once the communication is in place, just make a few changes to your PowerConnect switchgear. Note that community names are case sensitive, so decide on a name, and stick with it.

enable

configure

snmp-server location "Headquarters"

snmp-server contact "IT"

snmp-server community mycompany ro ipaddress 192.168.10.12
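
Once the monitoring VM described below is built, a quick sanity check along these lines can confirm that the switch actually answers with the community name you chose. This is only a sketch under a few assumptions: the snmpget client is installed on the VM (on Debian and Ubuntu it normally comes from the snmp package), the switch accepts SNMP v2c, and the function name check_snmp is my own invention rather than anything Dell or Cacti provides.

```shell
#!/bin/sh
# check_snmp HOST COMMUNITY   (hypothetical helper, not part of any package)
# Asks HOST for sysDescr.0 (OID 1.3.6.1.2.1.1.1.0) over SNMP v2c.
# -t 2 sets a two-second timeout and -r 1 allows one retry.
check_snmp() {
    host="$1"
    community="$2"
    if snmpget -v 2c -c "$community" -t 2 -r 1 "$host" 1.3.6.1.2.1.1.1.0 >/dev/null 2>&1; then
        echo "OK: $host answers SNMP queries"
    else
        echo "FAIL: no SNMP reply from $host (check community name, VLAN, and allowed IP)"
        return 1
    fi
}
```

For example, check_snmp 192.168.10.2 mycompany, where 192.168.10.2 stands in for your switch’s management address. The switch should only answer queries coming from the address named on the snmp-server community line, so run it from the monitoring VM itself.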

Monitoring VM – Pre Cacti configuration

Perform the following steps on the VM you will be using to install Cacti.

1. Install and configure SNMPD

apt-get update

apt-get install snmpd

mv /etc/snmp/snmpd.conf /etc/snmp/snmpd.conf.old

2. Create a new /etc/snmp/snmpd.conf with the following contents:

rocommunity mycompany

syslocation Headquarters

syscontact IT

3. Edit /etc/default/snmpd to allow snmpd to listen on all interfaces and use the config file. Comment out the first line below and replace it with the second line:

SNMPDOPTS='-Lsd -Lf /dev/null -u snmp -g snmp -I -smux -p /var/run/snmpd.pid 127.0.0.1'

SNMPDOPTS='-Lsd -Lf /dev/null -u snmp -g snmp -I -smux -p /var/run/snmpd.pid -c /etc/snmp/snmpd.conf'

4. Restart the snmpd daemon.

sudo /etc/init.d/snmpd restart

5. Install additional perl packages:

apt-get install libsnmp-perl

apt-get install libnet-snmp-perl
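
Because community names are case sensitive and have to match everywhere, it can help to generate the snmpd.conf from step 2 rather than typing it by hand. The following is a minimal sketch of that idea; the function name write_snmpd_conf and its argument order are my own and purely illustrative.

```shell
#!/bin/sh
# write_snmpd_conf PATH COMMUNITY LOCATION CONTACT   (hypothetical helper)
# Writes the three-line snmpd.conf from step 2 to PATH, overwriting it.
write_snmpd_conf() {
    cat > "$1" <<EOF
rocommunity $2
syslocation $3
syscontact $4
EOF
}
```

Run as root, write_snmpd_conf /etc/snmp/snmpd.conf mycompany Headquarters IT would produce the same file shown in step 2. Restart snmpd afterward as in step 4.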

Monitoring VM – Cacti Installation

6. Install Cacti on the monitoring VM:

apt-get update

apt-get install cacti

During the installation process, MySQL will be installed, and the installer will ask what you would like the MySQL root password to be. Then the installer will ask what you would like Cacti’s MySQL password to be. Choose passwords as desired.

Now, the Cacti installation is available at http://[cactiservername]/cacti with a username and password of "admin". Cacti will then ask you to change the admin password. Choose whatever you wish.

7. Download the PowerConnect add-on from http://docs.cacti.net/usertemplate:host:dell:powerconnect:62xx and unpack both zip files.

8. Import the host template via the GUI. Log into Cacti, go to Console > Import Templates, select the desired file (in this case, cacti_host_template_dell_powerconnect_62xx_switch.xml), and click Import.

9. Copy the 62xx_cpu.pl script into the Cacti script directory on the server (/usr/share/cacti/site/scripts). It may need executable permissions. If you downloaded it to a Windows machine and need to copy it to the Linux VM, WinSCP works nicely for this.

10. Depending on how things were copied, there might be Windows-style (CRLF) line endings in the .pl file. You can clean up the 62xx_cpu.pl file by running the following:

dos2unix 62xx_cpu.pl
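
If dos2unix isn’t installed, steps 9 and 10 can be approximated with standard tools. The sketch below strips carriage returns with tr and sets the executable bit in one go; the function name prepare_script is hypothetical and used only for illustration.

```shell
#!/bin/sh
# prepare_script FILE   (hypothetical helper)
# Removes carriage returns (the "\r" in Windows CRLF line endings) from
# FILE and marks it executable. Uses tr, so dos2unix is not required.
prepare_script() {
    file="$1"
    tmp="$(mktemp)" || return 1
    tr -d '\r' < "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    cat "$tmp" > "$file"    # write back in place so ownership is preserved
    rm -f "$tmp"
    chmod +x "$file"
}
```

For example, prepare_script /usr/share/cacti/site/scripts/62xx_cpu.pl, run with enough privileges to write to that directory.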

Using Cacti

You are now ready to run Cacti so that you can connect and monitor your devices. This example shows how to add the device to Cacti, then monitor CPU and a specific data port on the switch.

1. Launch Cacti from your workstation by browsing out to http://[cactiservername]/cacti and enter your credentials.

2. Create a new Graph Tree via Console > Graph Trees > Add. You can call it something like “Switches” then click Create.

3. Create a new device via Console > Devices > Add. Give it a friendly description, and the host name of the device. Enter the SNMP Community name you decided upon earlier. In my example above, I show the community name as being “mycompany” but choose whatever fits. Remember that community names are case sensitive.

4. To create a graph for monitoring CPU of the switch, click Console > Create New Graphs. In the host box, select the device you just added. In the “Create” box, select “Dell Powerconnect 62xx – CPU” and click Create to complete.

5. To create a graph for monitoring a specific Ethernet port, click Console > Create New Graphs. In the Host box, select the device you just added. Put a check mark next to the port number desired, and select In/Out bits with total bandwidth. Click Create > Create to complete.

6. To add the chart to the proper graph tree, click Console > Graph Management. Put a check mark next to the graphs desired, and change the “Choose an action” box to “Place on a Tree [Tree name]”.

Now when you click on Graphs, you will see your two items to be monitored.

By clicking on the magnifying glass icon, or by using the “Graph Filters” near the top of the screen, you can easily zoom in or out to various sampling periods to suit your needs.
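
If you are curious where the In/Out bits numbers come from, the arithmetic is simple. A switch exposes interface traffic as ever-increasing octet counters (for example ifInOctets, OID 1.3.6.1.2.1.2.2.1.10.<ifIndex>), and the rate is the difference between two readings divided by the time between them. The sketch below only illustrates that math for a 32-bit counter. It is not Cacti’s or RRDTool’s actual code, and the function name bits_per_second is made up for this example.

```shell
#!/bin/sh
# bits_per_second PREV CURR SECONDS   (illustrative helper only)
# PREV and CURR are two readings of a 32-bit octet counter taken
# SECONDS apart. Prints the average rate in bits per second.
bits_per_second() {
    prev="$1"
    curr="$2"
    secs="$3"
    # Adding 2^32 and taking the remainder handles a single counter wrap.
    delta=$(( ($curr - $prev + 4294967296) % 4294967296 ))
    # Eight bits per octet; integer division truncates any fraction.
    echo $(( $delta * 8 / $secs ))
}
```

With a five-minute (300 second) polling interval, bits_per_second 0 37500000 300 prints 1000000, or roughly 1 Mbit/s averaged over that interval.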

Conclusion

Using SNMP and a tool like Cacti can provide historical performance data for non-virtualized devices and systems in ways you’ve grown accustomed to in vSphere environments. How hard are your switches running? How much internet bandwidth does your organization use? This will tell you. Give it a try. You might be surprised at what you find.